
How does the RM Compare AI 'learn'? A new interactive demo

Learning means something different for a machine than for a human, and conflating the two is problematic but all too common. The same is true of intelligence. With our new Interactive Report you can now see how the RM Compare Machine learns.

An interactive example for you to try

This link takes you to a set of interactive reports from a completed session, in which 36 Judges completed 16 Rounds of judging on 131 Items. You can read more about Reporting in the dedicated Help Centre section, and there is also a Step-Thru available.

The session is described in the short video below.

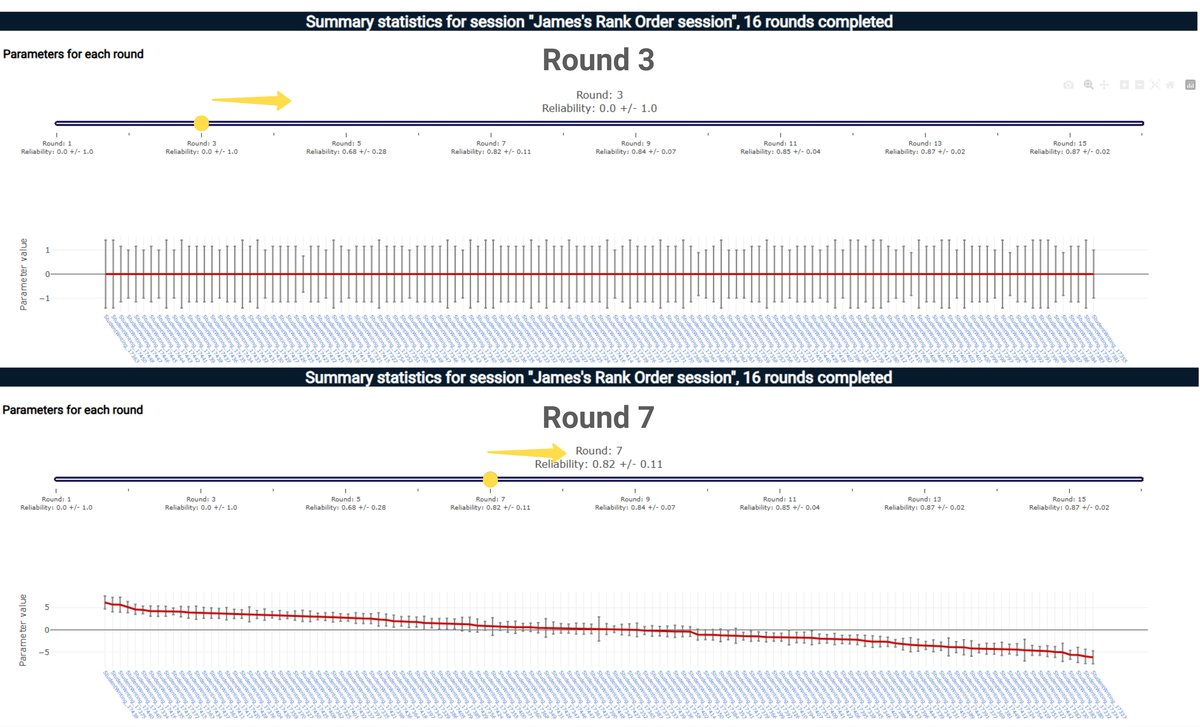

When you land in the Interactive Report, you can use the report 'slider' (shown below in yellow) to move through the rounds. As you can see below, at Round 3 the Machine's algorithm is still in the very early stages of learning. Even so, the confidence bars are not all the same length. The contests so far have provided enough data for the Machine not only to establish that some Items are better than others, but also to be more 'confident' in its understanding of some of those relative values. In this sense, the Machine is 'learning'.
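The post does not say which statistical model sits behind the Machine. Comparative judgement tools commonly use a Bradley-Terry (or closely related Rasch) model, in which each Item has a latent value v and the probability that Item i beats Item j is 1 / (1 + exp(-(v_i - v_j))). The minimal sketch below assumes that model; `fit_values`, `win_prob` and the `prior` penalty are illustrative names, not RM Compare's API, and the data is made up. It shows one way confidence bars can differ in length: an Item's rough standard error shrinks as it takes part in more contests.

```python
import math


def win_prob(vi, vj):
    """Bradley-Terry probability that an Item of value vi beats one of value vj."""
    return 1.0 / (1.0 + math.exp(-(vi - vj)))


def fit_values(n_items, judgements, iters=200, prior=0.1):
    """Estimate a value and a rough standard error for each Item.

    judgements: list of (winner, loser) index pairs.
    prior: strength of a small ridge penalty (a Gaussian prior centred on 0)
    that keeps estimates finite for Items that won or lost every contest.
    """
    v = [0.0] * n_items
    for _ in range(iters):
        grad = [-prior * x for x in v]   # gradient of the log-posterior
        info = [prior] * n_items         # diagonal of its negative Hessian
        for w, l in judgements:
            p = win_prob(v[w], v[l])
            grad[w] += 1.0 - p
            grad[l] -= 1.0 - p
            info[w] += p * (1.0 - p)
            info[l] += p * (1.0 - p)
        # Damped diagonal Newton step: each Item moves by half its own
        # gradient divided by its own curvature.
        v = [x + 0.5 * g / h for x, g, h in zip(v, grad, info)]
    # Recompute the curvature at the final estimates; its inverse square
    # root serves as a rough per-Item standard error (the "confidence bar").
    info = [prior] * n_items
    for w, l in judgements:
        p = win_prob(v[w], v[l])
        info[w] += p * (1.0 - p)
        info[l] += p * (1.0 - p)
    se = [1.0 / math.sqrt(h) for h in info]
    return v, se


# Hypothetical data: in every pair, the higher-ranked Item wins 3 of 4 contests.
base = []
for i in range(3):
    for j in range(i + 1, 3):
        base += [(i, j)] * 3 + [(j, i)]

early_v, early_se = fit_values(3, base)       # a few contests per Item
late_v, late_se = fit_values(3, base * 10)    # ten times as many contests
print([round(s, 2) for s in early_se])
print([round(s, 2) for s in late_se])
```

The diagonal-only standard error ignores correlations between Items, so it is a simplification chosen for brevity rather than a full covariance estimate.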

'Goldilocks' pairing

Learning continues as the session progresses, and it helps the Machine optimise pairings so that each judgement yields as much learning as possible. It pairs Items that are not too different in quality, but also not too similar: they are paired in the 'Goldilocks zone'.

With this approach the Machine learns quickly. Every judgement adds to its learning and influences the next pairing it surfaces. Coordinating a large session, with thousands of judgements taking place each second, is a complex task that no individual human could replicate.
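The post does not publish the pairing rule. Under a Bradley-Terry assumption, one judgement between Items whose values differ by d carries Fisher information p(1 - p), where p = 1 / (1 + exp(-d)); this is largest when the outcome is closest to 50:50. The hypothetical `goldilocks_partner` function below picks, among partners not yet judged against a given Item, the most informative one whose value gap lies between `min_gap` and `max_gap`. Reading 'not too similar' as a minimum gap is an assumption, as are the threshold values and the example estimates.

```python
import math


def goldilocks_partner(item, values, already_paired, min_gap=0.2, max_gap=1.5):
    """Pick a partner for `item` whose estimated value is neither too far
    away nor too close (hypothetical rule, not RM Compare's algorithm).

    values: current value estimates, one per Item (e.g. from a Bradley-Terry fit).
    already_paired: set of frozenset({a, b}) pairs that have been judged.
    min_gap, max_gap: assumed thresholds on the value gap, in logits.
    Returns the chosen partner index, or None if no candidate qualifies.
    """
    best, best_info = None, -1.0
    for other, v in enumerate(values):
        if other == item or frozenset((item, other)) in already_paired:
            continue  # skip self and repeat pairings
        gap = abs(values[item] - v)
        if not (min_gap <= gap <= max_gap):
            continue  # outside the Goldilocks zone
        p = 1.0 / (1.0 + math.exp(-gap))  # predicted chance the stronger Item wins
        info = p * (1.0 - p)              # expected information from this judgement
        if info > best_info:
            best, best_info = other, info
    return best


# Hypothetical current estimates for five Items.
values = [0.0, 0.1, 0.6, 1.2, 3.0]
print(goldilocks_partner(0, values, set()))
print(goldilocks_partner(0, values, {frozenset((0, 2))}))
print(goldilocks_partner(4, values, set()))
```

Item 1 is skipped as too similar to Item 0 and Item 4 as too different; once the 0-2 pairing has been judged, the rule falls back to the next most informative candidate, and an Item with no partner inside the band gets none.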

Human intelligence, however, remains central to the RM Compare process: it is real people making real judgements throughout. The Machine is there to assist, not to replace.

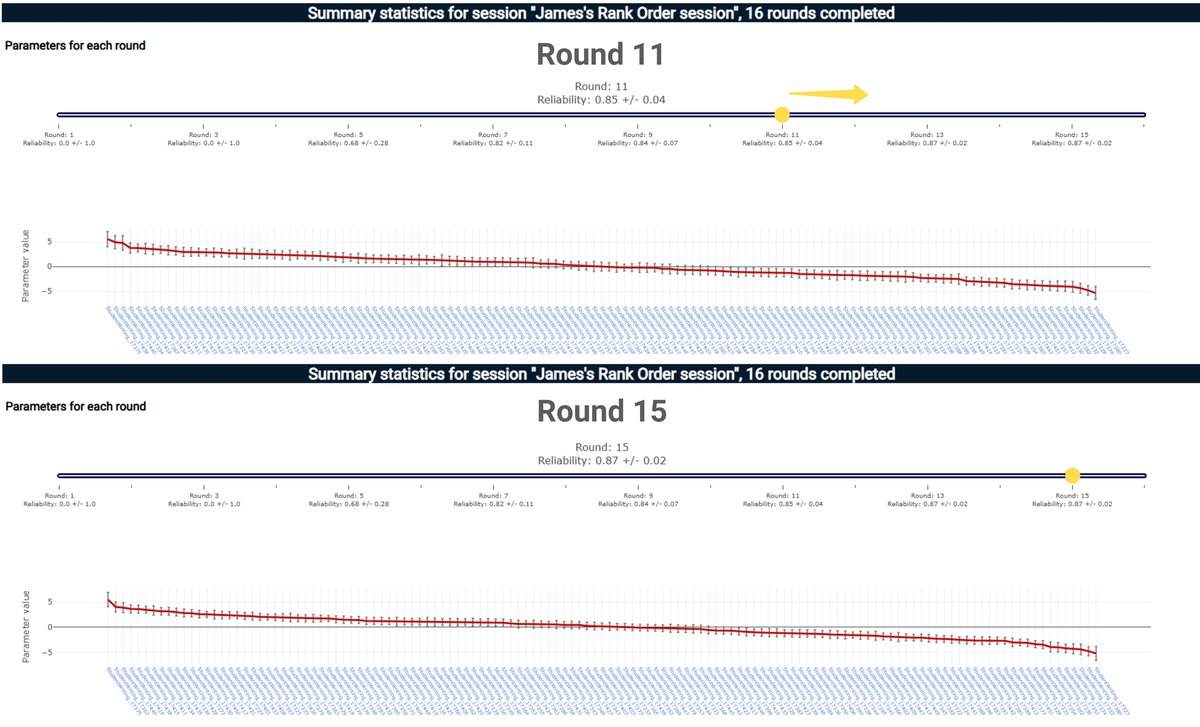

By Round 11 the Machine is very confident in the relative value of the Items. The session continues to Round 16; however, as expected, the rate of learning slows, because only marginal gains in reliability remain to be made.
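The post does not name the reliability statistic shown in the report. Comparative judgement studies commonly use Scale Separation Reliability (SSR): the share of the observed spread in Item values that is not explained by estimation error. The minimal sketch below assumes that statistic and adds an idealised, hypothetical model (not data from this session) in which every Round gives each Item one more contest, so squared standard errors shrink in proportion to 1 / rounds. Under those assumptions, five extra Rounds starting from Round 3 add about 0.14 to SSR, while the same five Rounds starting from Round 11 add only about 0.02.

```python
def scale_separation_reliability(values, ses):
    """SSR: share of the observed variance in Item values that is not
    accounted for by estimation error (mean squared standard error)."""
    n = len(values)
    mean = sum(values) / n
    observed_var = sum((v - mean) ** 2 for v in values) / (n - 1)
    mean_sq_error = sum(se ** 2 for se in ses) / n
    return max(observed_var - mean_sq_error, 0.0) / observed_var


def idealised_ssr(true_var, error_var_one_round, rounds):
    """SSR under an assumed model where squared standard errors shrink
    as error_var_one_round / rounds."""
    mean_sq_error = error_var_one_round / rounds
    return true_var / (true_var + mean_sq_error)


for r in (3, 8, 11, 16):
    print(r, f"{idealised_ssr(1.0, 1.0, r):.3f}")
# prints 0.750, 0.889, 0.917, 0.941 for rounds 3, 8, 11, 16
```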

Conclusion

The future will belong not to machines or humans alone, but to their collaboration. RM Compare is designed with this partnership in mind: letting machine learning do what it does best (efficiently sorting and surfacing data) so that humans can do what only they can, which is to make wise, creative, context-rich judgements about what counts as “good”.

By appreciating the fundamental differences between learning and intelligence in people and machines, we can better design tools and processes, like RM Compare, that truly enhance performance, support meaningful learning, and prepare us all to thrive in the AI age.