
When Written Applications No Longer Signal Ability: "This is a crisis" (2/3)

The rise of generative AI has disrupted far more than how we write; it has fundamentally broken a mechanism that labour markets, universities, and employers have relied on for decades to identify talent. Groundbreaking new research from Princeton and Dartmouth reveals just how profound this disruption is, and helps explain why solutions like RM Compare's Adaptive Comparative Judgement are now essential.

The Collapse of Written Signals

In their November 2025 paper "Making Talk Cheap: Generative AI and Labor Market Signaling," economists Anaïs Galdin and Jesse Silbert provide the first rigorous evidence of how LLMs like ChatGPT have destroyed the informational value of written job applications. Using data from Freelancer.com, a major digital labour platform with millions of job posts and applications, they demonstrate a stark before-and-after picture.

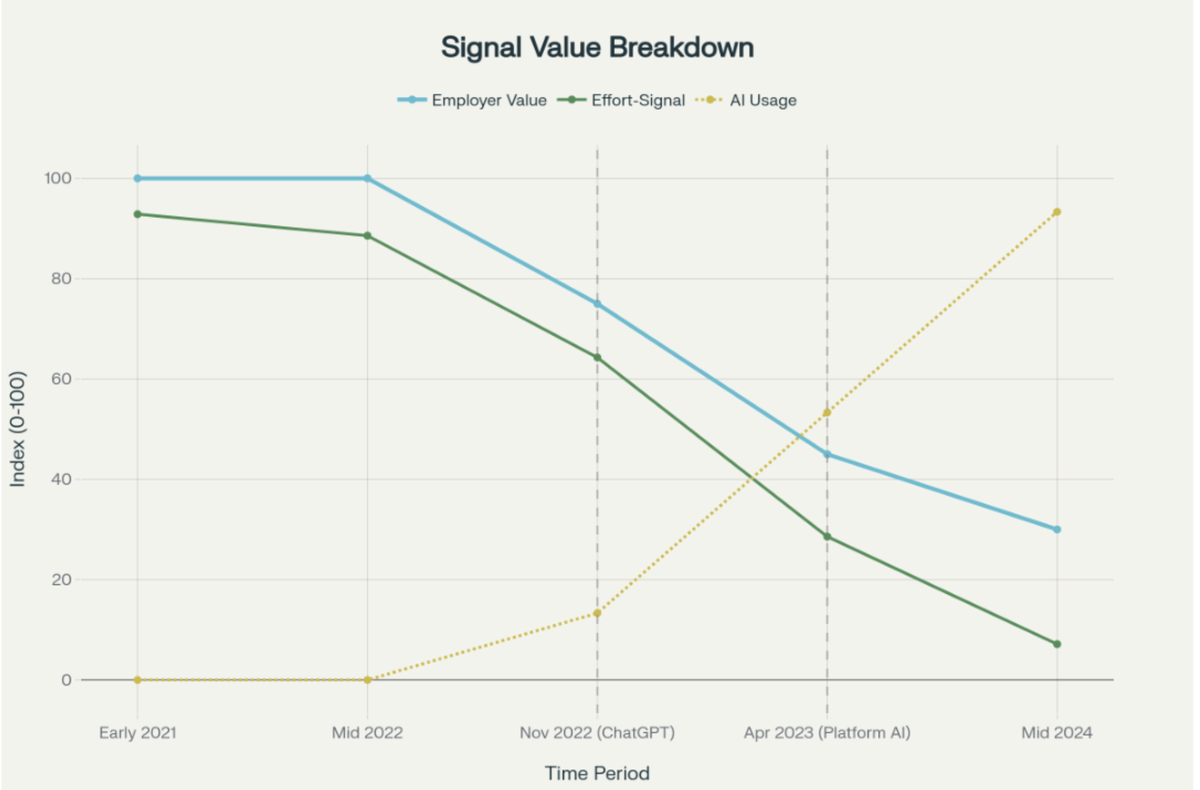

Before LLMs (pre-November 2022)

- Employers valued customised, job-specific proposals highly

- Workers who invested effort in tailoring applications signalled their genuine ability and interest

- A one standard deviation increase in proposal quality had the same effect on hiring chances as reducing the bid price by $26

- High-ability workers were rewarded; the market was meritocratic

After LLMs (post-November 2022)

- The correlation between effort and proposal quality became negative for AI-written applications

- Employer willingness to pay for customised proposals fell sharply

- Customised proposals no longer predicted successful job completion

- The signalling equilibrium collapsed

As AI adoption rises, the value employers place on written signals plummets and the link between effort and signal quality breaks down.

The Theory Behind the Breakdown

The research builds on Nobel laureate Michael Spence's seminal 1973 model of labour market signalling. Spence showed that even if education or written communication doesn't directly increase productivity, it can still have enormous value as a signal if, and only if, it is more costly for low-ability individuals to produce than for high-ability ones.

The mechanism is elegant: high-ability workers find it less costly (in time, effort, or psychological burden) to produce quality written work. In equilibrium, they invest more effort, send stronger signals, and employers can identify them. Everyone benefits from better matching.

LLMs shatter this mechanism. When anyone can produce a polished, highly customised application in seconds at almost no cost, the signal loses most of its informational value: the labour market can no longer separate high-ability from low-ability candidates through written applications.
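To see why cost is the crux, it helps to write down the textbook two-type version of Spence's model. The sketch below is our own simplified illustration, not Galdin and Silbert's structural model. It assumes two ability levels, fixed wages for workers identified as high or low ability, a signal-production cost of `cost_scale * s / theta` (so higher ability means cheaper signalling), and an optional ceiling on how strong a signal can be. The function name and all numbers are hypothetical. A signal level separates the types only if low-ability workers would rather not pay for it while high-ability workers still would.

```python
import math


def separating_signal_range(w_high, w_low, theta_high, theta_low,
                            cost_scale=1.0, max_signal=math.inf):
    """Return (s_min, s_max), the signal levels that separate the two types
    in a two-type Spence model, or None if no separating level exists.

    Assumptions (illustrative, not from the paper):
      - producing a signal of strength s costs cost_scale * s / theta,
        so a higher-ability worker (larger theta) pays less per unit;
      - employers pay w_high to anyone sending the separating signal
        and w_low to everyone else;
      - no signal can exceed max_signal (a proposal can only be so polished).
    """
    if theta_high <= theta_low:
        raise ValueError("theta_high must exceed theta_low")
    premium = w_high - w_low  # wage gain from being seen as high ability
    if premium <= 0:
        raise ValueError("w_high must exceed w_low")
    if cost_scale <= 0:
        # Free signals: a low-ability worker can copy any signal at no cost.
        return None
    # Low type must not want to mimic: cost_scale * s / theta_low >= premium
    s_min = theta_low * premium / cost_scale
    # High type must still find signalling worthwhile:
    # cost_scale * s / theta_high <= premium, capped by the signal ceiling
    s_max = min(theta_high * premium / cost_scale, max_signal)
    return (s_min, s_max) if s_min <= s_max else None


# Illustrative numbers only
print(separating_signal_range(100, 60, theta_high=2.0, theta_low=1.0))
# (40.0, 80.0)
print(separating_signal_range(100, 60, 2.0, 1.0, cost_scale=0.0))
# None: writing is free, so nothing separates the types
print(separating_signal_range(100, 60, 2.0, 1.0, cost_scale=0.1, max_signal=100))
# None: deterring mimics would need a signal stronger than is possible
```

The last call is the rough analogue of what an LLM does to a cover letter: once signalling is cheap enough, the level needed to deter low-ability mimics exceeds anything an application can actually show, and the separating range disappears.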

The Consequences Are Not Theoretical

Using sophisticated econometric modelling, Galdin and Silbert quantify exactly what happens when signalling breaks down.

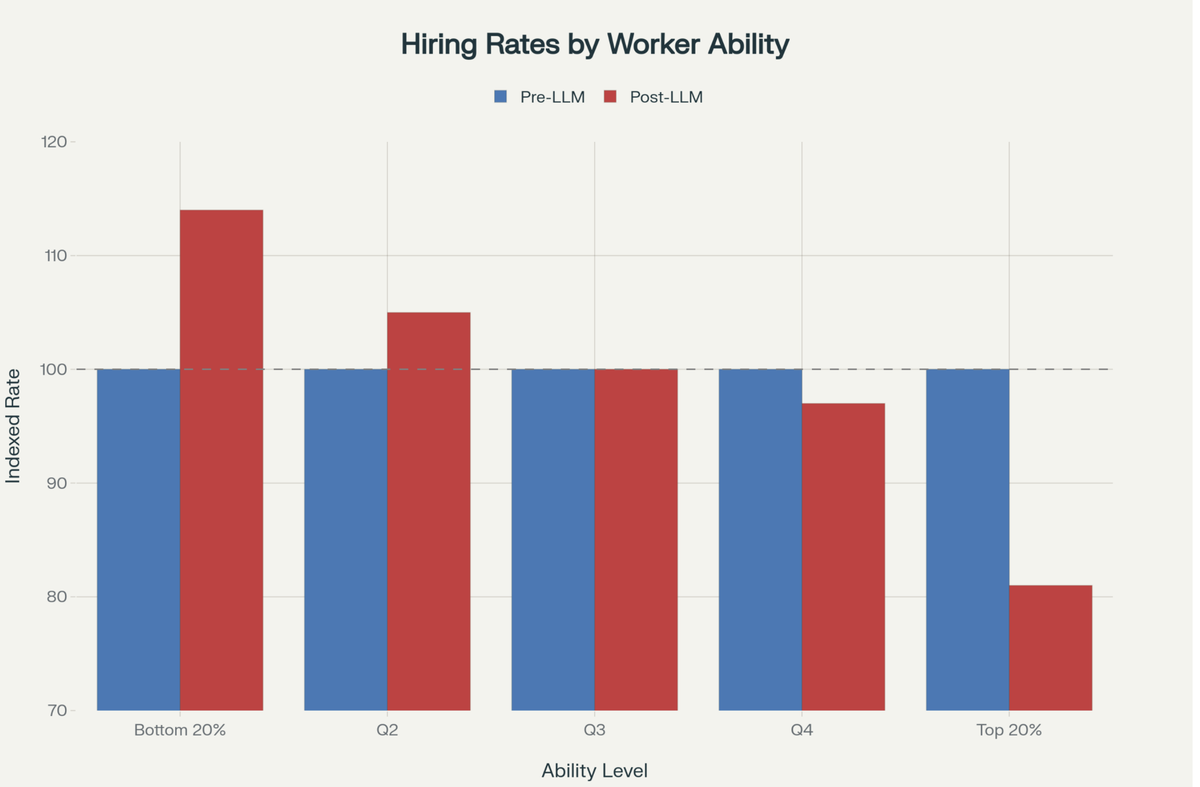

Hiring Becomes Dramatically Less Meritocratic

The researchers' structural model reveals the stark impact on who gets hired when written signals lose their value.

Hiring becomes dramatically less meritocratic when written signals lose their value: high-ability workers are hired 19% less often, low-ability workers 14% more often.

The numbers are sobering:

- Workers in the top quintile of ability are hired 19% less often

- Workers in the bottom quintile of ability are hired 14% more often

- Mid-tier workers see modest changes, with the gradient clearly favouring lower ability

This represents a fundamental reversal of meritocracy. The market that once rewarded genuine talent now systematically disadvantages it.

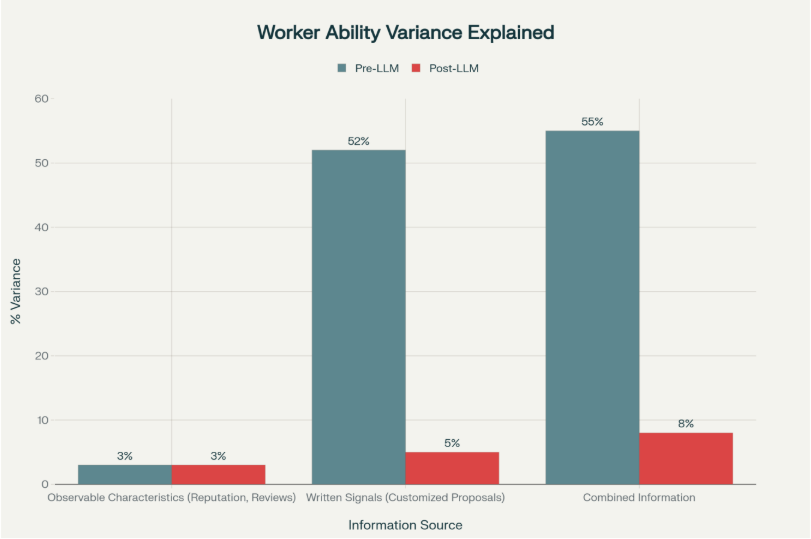

Observable Characteristics Cannot Fill the Gap

One might hope that employers could simply rely more heavily on observable characteristics such as reputation scores, past ratings and credentials. The research shows this is wishful thinking.

Observable characteristics explain only 3% of worker ability - written signals were critical for identifying talent, but have lost 90% of their predictive power.

Observable characteristics like on-platform reputation and reviews explain only 3% of actual ability variation. Written signals, by contrast, explained 52% of ability variance in the pre-LLM period. After LLM adoption, written signals explain only 5% - leaving employers nearly blind to worker quality.

This is the crisis: the signal that mattered most has been destroyed, and there's nothing obvious to replace it.
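The "90%" figure follows directly from the two variance shares: (52 - 5) / 52 ≈ 0.90. Translating variance explained into an implied correlation makes the drop more tangible. The sketch below treats each share as the R² of a single linear predictor of ability; that is our simplifying assumption, not necessarily how the paper decomposes ability, and the constant and function names are hypothetical.

```python
import math

# Shares of worker-ability variance explained, as reported above
R2_OBSERVABLES = 0.03
R2_SIGNAL_PRE_LLM = 0.52
R2_SIGNAL_POST_LLM = 0.05


def implied_correlation(r_squared):
    """Correlation with ability implied by a variance-explained share,
    assuming a single linear predictor (our simplification)."""
    return math.sqrt(r_squared)


# Fraction of the pre-LLM explanatory power that has disappeared
relative_loss = 1 - R2_SIGNAL_POST_LLM / R2_SIGNAL_PRE_LLM

print(f"Written signal, pre-LLM:  r = {implied_correlation(R2_SIGNAL_PRE_LLM):.2f}")
print(f"Written signal, post-LLM: r = {implied_correlation(R2_SIGNAL_POST_LLM):.2f}")
print(f"Observables:              r = {implied_correlation(R2_OBSERVABLES):.2f}")
print(f"Explanatory power lost:   {relative_loss:.0%}")
```

Under this reading, the written signal goes from a correlation of about 0.72 with ability to about 0.22, barely above the 0.17 implied for observable characteristics.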

Market Efficiency Falls Across the Board

The welfare consequences extend beyond hiring patterns.

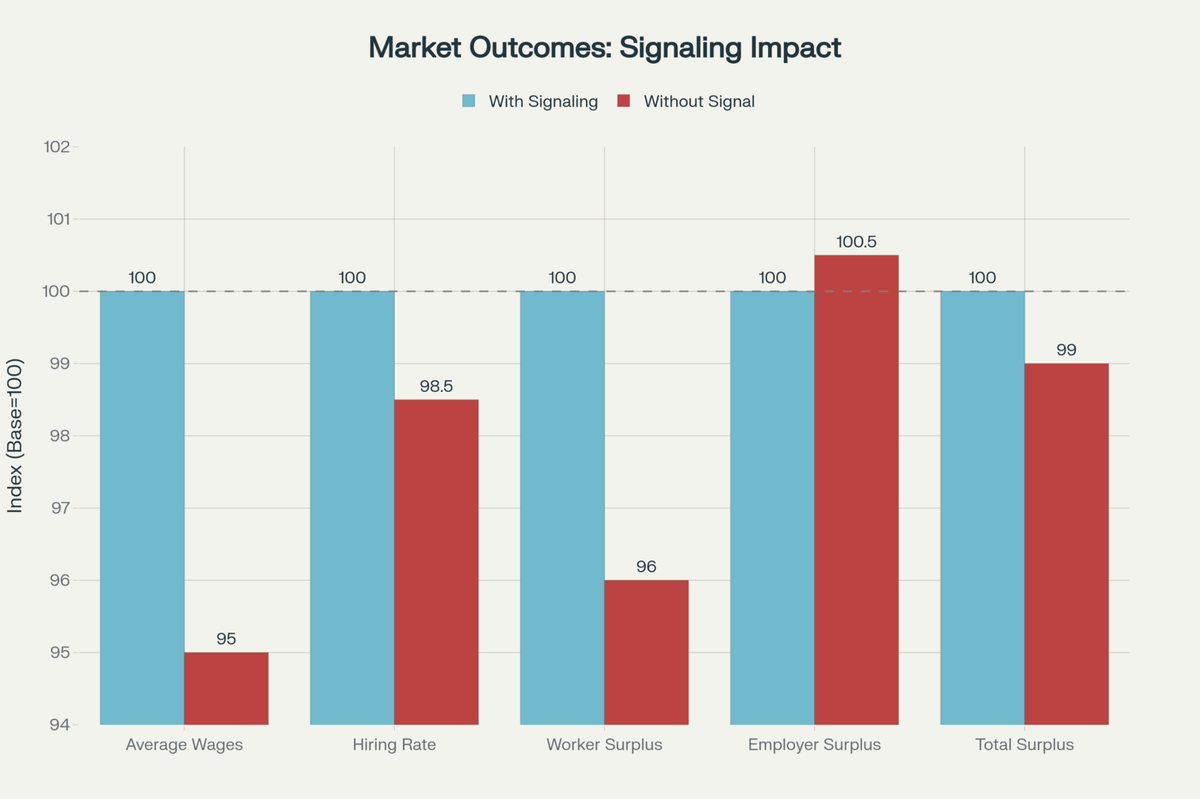

The efficiency cost of losing signals: workers bear the burden with 5% lower wages and 4% less surplus, while total market surplus falls 1%.

When signalling breaks down:

- Average wages drop 5% as competition shifts from quality to price

- Overall hiring rates decline 1.5% as employers struggle to identify good matches

- Worker surplus falls 4% from both lower wages and fewer successful hires

- Employer surplus barely changes (up 0.5%) - lower wages paid roughly offset lower-quality hires

- Total market surplus declines 1% - the market becomes less efficient overall

Workers bear the burden of this disruption. High-ability workers who could once credibly signal their value now compete on price against lower-cost, lower-ability workers - and lose.

Beyond the Labour Market: Universities, Admissions, and Assessment

The implications extend far beyond freelance platforms. The researchers explicitly note that their findings apply to any market where written communication has served as a costly signal:

- University admissions essays – Once a way for motivated, articulate students to stand out

- College coursework and assessments – Where the link between grades and genuine learning is now uncertain

- Professional applications – CVs, cover letters, grant proposals, and scholarship applications

- Workplace evaluations – Where written reports and self-assessments may no longer reflect true capability

One college career counsellor quoted in recent research observed: "We're now seeing students sending 300 applications a year. Sometimes it's 500 or even 1,000 applications from one student in one year. This wasn't possible before AI, and it's still accelerating."

The result? A "data plexiglass wall" where both applicants and employers hurl AI-generated content at each other with mounting frustration, neither side able to discern genuine signals from noise.

What RM Compare Offers: Performance-Based Signals That AI Cannot Fake

This is precisely where Adaptive Comparative Judgement becomes not just useful, but essential. Unlike written applications, which LLMs can now produce fluently in seconds, actual performance on authentic tasks cannot be faked cheaply.

RM Compare enables institutions, platforms, and employers to:

1. Replace Corrupted Written Signals with Authentic Performance

- Assess actual work samples, portfolios, or task completions

- Use comparative judgement to rank candidates holistically

- Build consensus from multiple expert judges rather than relying on a single AI-generated text

2. Restore Meritocracy Through Demonstrated Ability

- High-ability candidates reveal themselves through what they can do, not what they can write with AI assistance

- The cost structure that made Spence signalling work is restored: high-ability individuals genuinely find it less costly to produce quality work

- Employers and institutions can once again identify and reward genuine talent

3. Scale Fair Assessment Where Volume Overwhelms Traditional Methods

- Digital platforms face millions of applications - human review of each is impossible

- Traditional rubrics are gamed by AI-optimised text

- Comparative judgement efficiently surfaces quality through expert consensus, not predetermined criteria AI can reverse-engineer

4. Provide the "Gold Standard" Validation Layer for AI Systems

- RM Compare consensus rankings can calibrate and validate automated assessment

- When AI is used, it's anchored to authentic human judgement of actual performance

- This creates accountability and trust that standalone AI assessment cannot provide
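Comparative judgement replaces a rubric score with many simple decisions: a judge sees two pieces of work and picks the better one, and a statistical model turns those decisions into a single measurement scale. A standard model for paired comparisons is Bradley-Terry, in which the probability that item i beats item j is strength_i / (strength_i + strength_j). The sketch below fits that model with a minorisation-maximisation (MM) update. It is a generic illustration of the technique, not RM Compare's implementation; the function name, data format and example judgements are hypothetical.

```python
import math
from collections import defaultdict


def fit_bradley_terry(judgements, n_iter=500, tol=1e-10):
    """Turn pairwise judgements into a score for each piece of work.

    judgements: list of (winner, loser) ids, one per judge decision.
    Returns {id: score} on a logit scale centred at zero; higher is better.
    Every item needs at least one win and one loss, otherwise its
    maximum-likelihood score is infinite.
    """
    wins = defaultdict(int)
    losses = defaultdict(int)
    pair_counts = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in judgements:
        if winner == loser:
            raise ValueError("an item cannot be compared with itself")
        wins[winner] += 1
        losses[loser] += 1
        pair_counts[frozenset((winner, loser))] += 1

    items = sorted(set(wins) | set(losses))
    if any(wins[i] == 0 or losses[i] == 0 for i in items):
        raise ValueError("each item needs at least one win and one loss")

    strength = {i: 1.0 for i in items}
    for _ in range(n_iter):
        updated = {}
        for i in items:
            # MM update: wins_i / sum over opponents j of n_ij / (s_i + s_j)
            denom = 0.0
            for pair, n in pair_counts.items():
                if i in pair:
                    (j,) = pair - {i}
                    denom += n / (strength[i] + strength[j])
            updated[i] = wins[i] / denom
        # The model only identifies ratios, so fix the geometric mean at 1
        log_mean = sum(math.log(v) for v in updated.values()) / len(updated)
        updated = {i: v / math.exp(log_mean) for i, v in updated.items()}
        change = max(abs(updated[i] - strength[i]) for i in items)
        strength = updated
        if change < tol:
            break
    return {i: math.log(v) for i, v in strength.items()}


# Illustrative judgements (winner, loser) on three scripts
decisions = [("A", "B"), ("A", "B"), ("B", "A"),
             ("B", "C"), ("B", "C"), ("C", "B"),
             ("A", "C"), ("A", "C"), ("C", "A")]
scores = fit_bradley_terry(decisions)
print(sorted(scores, key=scores.get, reverse=True))  # ['A', 'B', 'C']
```

No judge ever assigns an absolute mark here; the ranking emerges from the pattern of relative decisions, which is why there is no fixed rubric for AI-optimised text to target.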

RM Compare Case Studies

How Caine College of the Arts used RM Compare to improve admissions.

Learn how they overcame a number of assessment challenges with RM Compare to produce a fairer admissions system built on trust.

To produce an authentic, innovative talent identification solution, Amplify Trading partnered with Assessment from RM to use adaptive comparative judgement in a peer assessment format, assessing candidates’ ability to present complex conclusions in actionable ways.

The Research Makes the Case

The Galdin and Silbert paper provides robust empirical evidence for what many educators and employers have intuitively sensed: we cannot rely on written applications anymore. But the research also points the way forward.

The authors' structural model reveals that employers in the pre-LLM period were willing to pay $52 (about 79% of a standard deviation in bids) for a one standard deviation increase in worker ability. The demand for genuine ability is strong - what's missing now is a credible mechanism to identify it.

That's exactly what comparative judgement provides:

- Validity: Assessing actual work quality through holistic professional judgement

- Reliability: Achieving consensus through multiple comparisons, with reliability coefficients regularly exceeding 0.90

- Efficiency: Using adaptive algorithms to maximise information from each judgement

- Fairness: Reducing bias by aggregating diverse expert perspectives rather than relying on AI-gameable criteria
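The reliability and efficiency claims above can also be sketched. A widely used reliability statistic in comparative judgement is scale separation reliability (SSR): the proportion of the spread in estimated scores that is not measurement error. The article does not say which coefficient its 0.90 figure refers to, so treating it as SSR is our assumption. "Adaptive" pairing means choosing the next comparison where it adds the most information; under a Bradley-Terry model one judgement between two items carries information p(1 - p), which peaks when the pair is closely matched. The pairing rule below is a deliberately simplified heuristic, not RM Compare's algorithm, and all function names and scores are hypothetical.

```python
import math
from itertools import combinations
from statistics import mean, pvariance


def win_probability(a, b):
    """Bradley-Terry probability that an item at logit score a beats one at b."""
    return 1.0 / (1.0 + math.exp(b - a))


def standard_errors(scores, pair_counts):
    """Approximate standard error per item from its Fisher information.

    pair_counts maps (i, j) tuples, with i < j, to the number of times that
    pair has been judged. Covariances between items are ignored.
    """
    info = {i: 0.0 for i in scores}
    for (i, j), n in pair_counts.items():
        p = win_probability(scores[i], scores[j])
        info[i] += n * p * (1 - p)  # each judgement carries p(1 - p) information
        info[j] += n * p * (1 - p)
    return {i: 1 / math.sqrt(v) if v > 0 else math.inf for i, v in info.items()}


def scale_separation_reliability(scores, ses):
    """Share of observed score variance that is not measurement error."""
    observed_var = pvariance(list(scores.values()))
    error_var = mean([se ** 2 for se in ses.values()])
    return (observed_var - error_var) / observed_var


def next_pair(scores, pair_counts):
    """Simplified adaptive rule: among the least-judged pairs, pick the one
    whose outcome is most uncertain (largest p(1 - p))."""
    def priority(pair):
        p = win_probability(scores[pair[0]], scores[pair[1]])
        return (pair_counts.get(pair, 0), -p * (1 - p))
    return min(combinations(sorted(scores), 2), key=priority)


# Illustrative scores on a logit scale, held fixed to isolate the effect
# of adding more judgements per pair
scores = {"A": 1.0, "B": 0.0, "C": -1.0}
for n in (3, 30, 100):
    counts = {pair: n for pair in combinations(sorted(scores), 2)}
    ssr = scale_separation_reliability(scores, standard_errors(scores, counts))
    print(n, round(ssr, 2))

print(next_pair(scores, {("A", "B"): 1, ("A", "C"): 0, ("B", "C"): 0}))
```

With these numbers, reliability is below zero at 3 judgements per pair, about 0.85 at 30 and about 0.95 at 100, which is why reliability is reported alongside the ranking rather than assumed. The pairing rule picks B against C: both untouched pairs are equally under-judged, but B and C are closer in score, so that judgement is the more informative one.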

From Theory to Practice: Integration Opportunities

The parallels between the Freelancer.com marketplace and educational/employment ecosystems are striking.

| Challenge | Freelancer.com | Education/Employment | RM Compare |
|---|---|---|---|
| Volume | Millions of AI-written proposals | Thousands of AI-written essays | Assessment of authentic tasks |
| Selection | Platform ranks by reputation | Relies on GPAs and test scores | Comparative judgement of portfolios |
| Quality Detection | Cannot distinguish quality | Cannot identify capable students | Expert consensus reveals ability |
| Outcomes | High-ability workers losing | Talented students disadvantaged | Merit-based selection restored |

RM Compare is ready to partner with:

- Digital labour platforms seeking to restore trust and meritocracy in hiring

- Universities needing authentic assessment methods in the age of AI

- Employers wanting to identify genuine capability beyond AI-written applications

- Professional bodies validating skills and competencies

The Path Forward

The research is clear: the LLM-driven collapse of written signalling is not a temporary disruption - it's a permanent structural change. Markets, institutions, and organisations that continue to rely on written applications, essays, or reports as signals of ability will increasingly make poor decisions, hire the wrong people, and undermine meritocracy.

The solution is equally clear: shift from assessing what people can write with AI to assessing what people can actually do. Adaptive Comparative Judgement makes this transition practical, scalable, and fair.

As Galdin and Silbert's research demonstrates, when signalling breaks down, everyone loses - workers, employers, and society. But when authentic performance replaces corrupted signals, we can restore both efficiency and fairness to the matching process.

The question for institutions is no longer whether written signals have lost their value; the evidence is conclusive. The question is: what will you use instead?

About this series

This is the second post in our series examining how generative AI has disrupted traditional assessment signals and what institutions can do to restore fair, merit-based evaluation.

- When Written Applications No Longer Signal Ability: The research evidence showing how AI has destroyed the informational value of written work

- The AI Shift and the Future of Fair Assessment: (This post) Why traditional assessment methods are failing and why comparative judgement offers a robust alternative

- Restoring Trust in Meritocracy: Real-world evidence from institutions successfully implementing fair assessment in the AI era