
RM Compare – Assessment AS Learning: Developing Tacit Knowledge of 'What Good Looks Like'

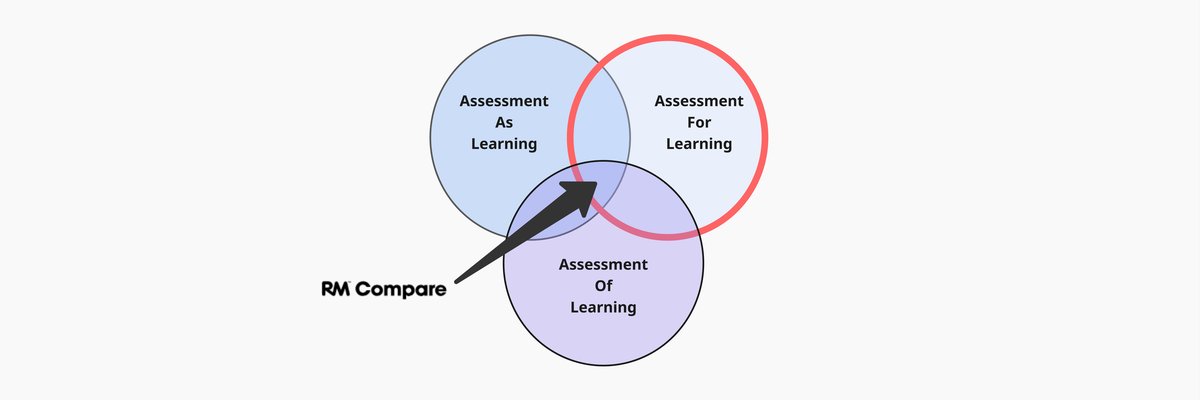

This is the first in a three-part series looking at assessment AS learning, FOR learning and OF learning.

As digital transformation reshapes assessment, RM Compare stands out for its ability to turn assessment into a powerful learning experience. Using Adaptive Comparative Judgement (ACJ), RM Compare helps learners develop the tacit knowledge needed to recognise and produce high-quality work, empowering them to self-manage, self-check and support their peers’ progress.

The Role of RM Compare in Developing Tacit Knowledge

Tacit knowledge, the personal, experience-based understanding that is difficult to articulate, is foundational for students to recognise quality and improve their work. RM Compare uses ACJ, in which learners repeatedly decide which of two pieces of work is better, comparing peer submissions from past and present cohorts. Through these active judgements, implicit standards become explicit, and students internalise what constitutes high-quality work without relying solely on written criteria.
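The article does not describe how RM Compare turns individual judgements into a rank order. As an illustration only: comparative judgement data are commonly analysed with a Bradley-Terry (or closely related Rasch) model, where each piece of work gets a "strength" and the chance that one piece beats another depends on their relative strengths. The minimal sketch below assumes that model. The function names `fit_bradley_terry` and `next_pair` and the toy data are hypothetical, and the simple pairing rule is a stand-in for an adaptive algorithm, not RM Compare's own.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical sketch, NOT RM Compare's algorithm. Each judgement is a
# (winner, loser) pair recorded when a judge picks the better of two pieces of work.


def fit_bradley_terry(items, judgements, iterations=200):
    """Estimate a positive strength per item using the standard
    minorise-maximise (MM) updates for the Bradley-Terry model.
    Assumes the win/loss graph is strongly connected (every item has won
    and lost at least once); otherwise estimates can collapse to 0."""
    wins = defaultdict(int)
    pair_counts = defaultdict(int)
    for winner, loser in judgements:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    strength = {item: 1.0 for item in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            # Sum over opponents j of n_ij / (p_i + p_j)
            denom = sum(
                pair_counts[frozenset((i, j))] / (strength[i] + strength[j])
                for j in items
                if j != i
            )
            updated[i] = wins[i] / denom if denom > 0 else strength[i]
        # Strengths are only defined up to a constant factor, so rescale to mean 1.
        total = sum(updated.values())
        strength = {i: s * len(items) / total for i, s in updated.items()}
    return strength


def next_pair(strength, judgements):
    """Hypothetical adaptive step: prefer the least-compared pair, breaking
    ties by how close the two current estimates are."""
    counts = defaultdict(int)
    for a, b in judgements:
        counts[frozenset((a, b))] += 1
    return min(
        combinations(sorted(strength), 2),
        key=lambda pair: (counts[frozenset(pair)],
                          abs(strength[pair[0]] - strength[pair[1]])),
    )


# Illustrative data only: four anonymised pieces of work, A to D.
items = ["A", "B", "C", "D"]
judgements = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"),
              ("B", "D"), ("C", "D"), ("D", "A")]

scores = fit_bradley_terry(items, judgements)
ranking = sorted(items, key=scores.get, reverse=True)
print(ranking)
print(next_pair(scores, judgements))
```

With this toy data, A comes out on top, and the sketch suggests C and D as the next pair to judge, because they have been compared only once and sit closest together on the scale. Pairing pieces of similar estimated quality is the intuition behind making the comparisons "adaptive": close calls are where a judge's decision tells you the most.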

Evidence from Purdue University: Learning by Evaluation

A major study in Purdue University’s ‘Design Thinking in Technology’ course, involving over 550 first-year students, provides robust evidence of RM Compare’s impact. Half the cohort used RM Compare to review and compare anonymised work from previous students before completing their own assignments, while the other half followed traditional, teacher-led feedback methods.

Key Outcomes

- Significant Attainment Gains: Seven out of the top ten performers were from the ACJ group using RM Compare. Students exposed to a wide range of peer work via RM Compare performed significantly better than those using traditional approaches.

- Tacit Knowledge Development: By making comparative judgements, students internalised what ‘good’ work looks like. This process made quality standards visible, actionable, and not just teacher-defined.

- Equity of Impact: The improvement in attainment was seen across the full spectrum of student ability, including students who are typically harder to reach.

- Scalable and Enjoyable: Both students and teachers found the process intuitive and enjoyable, and the technology enabled scalable formative assessment without increasing teacher workload.

Exposure to a broad range of work from former students helped the ACJ group identify what a ‘good’ piece of work looks like. Making comparative decisions also helped them internalise what the exercise and course were teaching, and this learning is then consolidated as students verbalise their feedback to peers.

Insights from the University of Liverpool: Broadening Assessment’s Impact

The University of Liverpool’s School of Engineering conducted a large-scale study with 390 students to test RM Compare in three areas: peer assessment, feed-forward (reviewing previous work to understand assignment expectations), and grading.

Key Findings

- Improved Understanding of Quality: 89% of students agreed or strongly agreed that judging with RM Compare gave them a better understanding of the quality of their own work.

- Deepened Topic Understanding: 61% agreed or strongly agreed that the process improved their general understanding of the topic.

- Better Than Traditional Methods: Over 70% of students preferred judging with RM Compare to simply viewing posters in a traditional exhibition.

- Scalable for Large Cohorts: RM Compare overcame logistical and cost challenges associated with traditional poster review exhibitions, making high-quality assessment feasible even for large student groups.

How RM Compare Supports Assessment AS Learning

Drawing on these studies, RM Compare enables:

- Exposure to a Range of Work: Learners see a breadth of real examples, calibrating their understanding of quality beyond isolated exemplars.

- Active Comparative Judgement: Making decisions about which work better meets criteria encourages deep engagement, reflection, and the articulation of tacit standards.

- Peer Feedback and Self-Reflection: The process of justifying choices and discussing results helps students internalise criteria, supporting self-regulation and peer learning.

- Efficient, Reliable, and Scalable Assessment: Both Purdue and Liverpool found RM Compare to be a scalable solution that maintains reliability without increasing teacher workload, even in large cohorts.

Conclusion

The Purdue and Liverpool studies demonstrate that RM Compare transforms assessment into a collaborative, reflective and impactful learning experience. By helping students internalise what ‘good’ looks like through comparative judgement, RM Compare empowers all learners to take ownership of their progress, supports equity of attainment and makes high-quality assessment scalable, turning it into a true engine for learning.

The studies here were conducted in higher education; however, the same principles should form an important part of all learning scenarios, including K-12 schools and the workplace.