Designing Healthy RM Compare Sessions: Build Reliability In, Don’t Inspect It In

The best way to avoid the “we worked hard and reliability is low” moment is to design sessions so that health is baked in from the start. Healthy sessions are not accidents: they result from clear purpose, good task design, well‑briefed judges, and enough comparisons to let the model discover a shared scale of quality. This post turns what you now know about rank order, judge misfit and item misfit into concrete design principles you can apply before, during and after a session.

Start with Purpose and Stakes

Everything else should follow from a clear answer to: What decision or learning purpose is this session serving, and how high do the stakes feel for those involved?

Broadly:

- Exploratory / formative / peer learning. You can live with moderate reliability (around 0.7–0.8) if the main goal is feedback, discussion and professional learning, not high‑stakes grading. Sessions can be smaller and looser, with more emphasis on misfit as a conversation starter.

- Internal moderation / school‑level decision‑making. Aim for at least the high 0.8s, with enough items and judges to give a stable rank order that underpins curriculum and standards discussions.

- High‑stakes / external reporting. Design ambitiously for 0.9+ reliability, drawing on the evidence that well‑run ACJ sessions frequently achieve this, but recognising that it requires sufficient items, comparisons and judge preparation.

Being explicit about stakes helps you calibrate expectations and decide how much time and judging effort to invest.

Choose Items and Judges Deliberately

Items: range, clarity, and logistics

Research shows that CJ reliability benefits when items span a useful range of quality and represent the construct clearly, rather than being tightly homogeneous. In RM Compare:

- Aim for a meaningful spread. Include work that you expect to be clearly strong, clearly weak, and in between, so judges can calibrate their sense of quality; this increases true variance and helps the model differentiate items.

- Keep the construct focused. Use tasks and prompts that target a single overarching construct (e.g. “overall quality of analytical writing”) rather than mixing several unrelated criteria in one judgement; this supports consensus and reduces misfit.

- Check technical readiness. Make sure file formats, legibility and naming are clean (e.g. no “Scan_1234.pdf” for three different tasks); RM Compare guidance explicitly notes that good naming and consistent presentation make setup smoother and post‑hoc interpretation easier.

Judges: expertise, diversity, and support

CJ studies consistently find that both expert and peer judges can produce reliable results, and that more comparisons per judge and clearer guidance improve stability.

When selecting and preparing judges:

- Match expertise to stakes. For high‑stakes uses, draw on teachers or assessors with relevant subject and age‑phase expertise; for peer or formative uses, trained students can work well but may need more structured briefing.

- Use diversity intentionally. A mix of perspectives (e.g. teachers from different schools) can strengthen construct understanding, but increases the need for calibration; judge misfit will show you whether that diversity is converging or fragmenting.

- Plan realistic workloads. Recent work indicates that in adaptive contexts, about 10 comparisons per item can often be enough for solid reliability, although you may need more depending on your context (for example, if the session is high stakes). Convert this into per‑judge workloads that are challenging but doable within your time window.

Get the Holistic Statement and Briefing Right

A clear holistic statement is one of the main theoretical underpinnings of reliability and validity in ACJ. RM Compare explicitly encourages broad statements that capture the essence of what should drive judgements, rather than long checklists.

For healthy sessions:

- Write a concise, positive holistic statement. For example: “Overall quality of argument and use of evidence in this essay” is usually better than a long bullet list of micro‑criteria.

- Test it with sample work. Before launching the session, share a small set of items and ask a few potential judges to talk through decisions; refine the statement if they focus on aspects you didn’t intend (e.g. presentation instead of reasoning).

- Brief judges on process and intent. Walk through: how to log in, how to make a judgement, what to do with “hard” pairs, and how their decisions will feed the report. This reduces accidental misfit due to confusion about the tool rather than the construct.

The more aligned judges are going in, the more informative judge misfit will be: it will highlight genuine differences, not just misunderstandings.

Design for Enough, Not Endless, Judgements

You rarely know in advance exactly how many judgements you will need, but the research gives useful ranges.

From meta‑analysis and implementation guidance:

- For reliability around 0.7, expect on the order of 8–10 comparisons per item.

- For reliability around 0.9, plan towards 12–14 comparisons per item.

- More comparisons per judge improve their own internal consistency as they become familiar with the task, up to a point; however, very large workloads can fatigue judges, potentially increasing misfit.

In RM Compare you control this mainly via:

- Number of items

- Number of judges

- How long you keep the session open

A simple planning move is to sketch a table (e.g. 60 items, 12 judges, aiming for about 15 comparisons per item) and then check whether that implies a realistic number of comparisons per judge within the available time.
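The planning check described above can be sketched in a few lines of Python. This is a rough sketch, not an RM Compare feature: the function names are hypothetical, it assumes that each judgement counts towards the comparison total of both items in the pair, and the 90 seconds per judgement is purely an illustrative guess that you should replace with your own estimate.

```python
import math


def judgements_needed(n_items: int, comparisons_per_item: int) -> int:
    """Total pairwise judgements needed so that each item appears in
    roughly `comparisons_per_item` comparisons.

    Assumption: one judgement involves two items, so it adds one
    comparison to each of them.
    """
    return math.ceil(n_items * comparisons_per_item / 2)


def per_judge_workload(n_items: int, comparisons_per_item: int, n_judges: int) -> int:
    """Judgements each judge must make if the work is shared evenly."""
    total = judgements_needed(n_items, comparisons_per_item)
    return math.ceil(total / n_judges)


def judging_minutes(workload: int, seconds_per_judgement: float) -> float:
    """Approximate judging time per judge, in minutes."""
    return workload * seconds_per_judgement / 60


# The example from the text: 60 items, 12 judges, about 15 comparisons per item.
plan_total = judgements_needed(60, 15)
plan_per_judge = per_judge_workload(60, 15, 12)
# 90 seconds per judgement is an illustrative assumption, not a measured value.
plan_minutes = judging_minutes(plan_per_judge, 90)

print(plan_total, plan_per_judge, plan_minutes)
```

Under these assumptions the example plan implies 450 judgements in total, 38 per judge, and a little under an hour of judging each. If that does not fit the available time, adjust the number of judges, the number of items, or the target comparisons per item before launching.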

Monitor Health While the Session Runs

Health is easier to protect than to repair. RM Compare’s reporting and monitoring tools let you watch how things are developing rather than waiting for an end‑of‑session surprise.

During the session:

- Track completion and coverage. Ensure judges are logging in and making judgements at the expected pace; stalled judges or items that have received far fewer comparisons than others can drag reliability down.

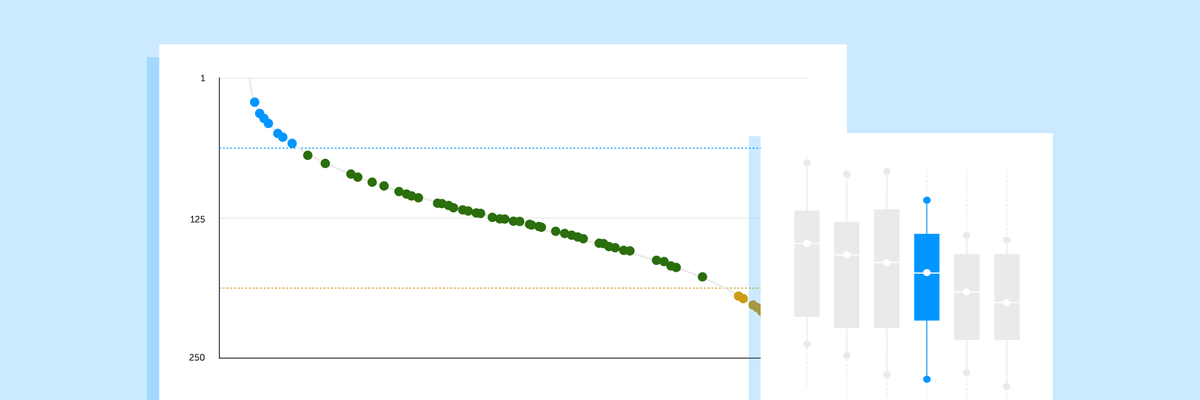

- Watch emerging reliability. Reliability will usually climb rapidly at first and then level off as additional comparisons yield diminishing returns; use this shape to decide whether it is worth extending the session or whether you have enough for your purpose.

- Glance at early misfit patterns. Extreme judge misfit emerging quickly, especially combined with very fast or very slow decision times, may be a cue to check in with that judge, clarify expectations, or adjust roles.

These live checks turn judge misfit and item misfit from retrospective diagnostics into real‑time quality control.
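If you keep your own record of progress, two of the checks above (coverage and the levelling-off of reliability) can be approximated with a short script. The sketch below is only illustrative: the data layout (a list of item-ID pairs and a list of reliability readings noted at intervals), the function names, and the thresholds are all assumptions, not an RM Compare export format or API, and the numbers are made up for the example.

```python
from collections import Counter
from statistics import median


def coverage(judgements, items):
    """Count how many judgements each item has appeared in so far."""
    counts = Counter({item: 0 for item in items})
    for left, right in judgements:
        counts[left] += 1
        counts[right] += 1
    return counts


def under_covered(counts, fraction=0.5):
    """Items seen in fewer than `fraction` of the median number of comparisons."""
    threshold = fraction * median(counts.values())
    return sorted(item for item, n in counts.items() if n < threshold)


def has_plateaued(reliability_history, window=3, min_gain=0.01):
    """True if reliability rose by less than `min_gain` over the last
    `window` readings, suggesting further judging adds little."""
    if len(reliability_history) <= window:
        return False
    gain = reliability_history[-1] - reliability_history[-1 - window]
    return gain < min_gain


# Made-up illustrative data: item "D" has not been judged yet.
items = ["A", "B", "C", "D"]
judgements = [("A", "B"), ("A", "C"), ("B", "C"),
              ("A", "B"), ("B", "C"), ("A", "C")]
lagging = under_covered(coverage(judgements, items))

# Made-up reliability readings noted at regular intervals during a session.
history = [0.42, 0.61, 0.74, 0.81, 0.84, 0.845, 0.848, 0.849]
levelled_off = has_plateaued(history)

print(lagging, levelled_off)
```

The thresholds here (half the median coverage, a gain of less than 0.01 over three readings) are arbitrary starting points; choose values that match the stakes and the reliability target you set at the design stage.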

Build a Simple Session Design Checklist

To make all of this practical, it helps to routinise design decisions. A lightweight checklist might look like this:

- Purpose and stakes

  - Have we agreed why we are running this session and what kind of reliability is “good enough” for that purpose?

- Items

  - Do we have enough items, with a realistic spread of quality, to tell a useful story?

  - Are tasks, prompts and file formats clear and accessible?

- Judges

  - Do we have enough judges, with appropriate expertise for the stakes?

  - Is the planned number of comparisons per judge realistic for the time available?

- Construct and briefing

  - Is the holistic statement clear, focused and tested on sample work?

  - Have judges been briefed on both the construct and the tool workflow?

- Monitoring and follow‑up

  - Who will keep an eye on reliability, judge misfit and progress during the session?

  - How will we use misfit and the final rank order afterwards (e.g. moderation meeting, task redesign)?

Answering these questions explicitly before you press “start” dramatically reduces the odds of ending up with a low‑reliability session that nobody quite knows how to interpret.

Putting it all together

Healthy RM Compare sessions are designed around a clear purpose, a focused construct, deliberate choices about items and judges, and live monitoring of reliability and misfit. Research on CJ and ACJ shows that when these elements are in place, very high reliability is normal rather than exceptional. Combined with the interpretive tools from the earlier posts in this series, that gives you a practical recipe for sessions that are not just statistically strong, but educationally meaningful.