Understanding RM Compare Reports: Building Assessment Health, Not Chasing a Magic Number

Most RM Compare users open a standard report, see a reliability value and a set of graphs, and then have to decide, often very quickly, whether their session “worked.” A single number is doing far too much heavy lifting in that moment. This first post reframes how to think about your reports. Instead of asking “Is my reliability high enough?”, the more useful question is “How healthy is this session, and what is it telling me to do next?”

What Your Standard Report Is Really Showing You

At its core, RM Compare is collecting many pairwise judgements and using a Rasch-style model to place every piece of work on a single underlying scale of quality. Rather than marking each script independently, judges decide which of two items better fits the construct you care about; over many comparisons across many judges, a rank order emerges that reflects shared professional consensus. The standard report is simply a set of different views on that same model.
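To make the idea of a "single underlying scale" concrete, here is a minimal sketch of the logistic pairwise model commonly used in comparative judgement. It assumes parameter values are on a logit scale, so that the gap between two items determines how often one would be expected to win. The function name and the example values are illustrative assumptions, not RM Compare's documented internals.

```python
import math


def win_probability(quality_a: float, quality_b: float) -> float:
    """Chance that item A is preferred to item B under a logistic pairwise model.

    Assumes both parameter values sit on the same logit scale.
    """
    return 1.0 / (1.0 + math.exp(-(quality_a - quality_b)))


# Two items with the same parameter value are a coin toss.
print(round(win_probability(1.0, 1.0), 2))  # 0.5

# A two-logit gap means the stronger item should win most comparisons.
print(round(win_probability(2.0, 0.0), 2))  # 0.88
```

Under this kind of model, a judge preferring the weaker of two widely separated items is surprising, while a "wrong" call between two adjacent items is not. That is the intuition behind the misfit views discussed later.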

Three elements appear every time:

- Rank order and parameter values – the ordered list of items from strongest to weakest, placed on a continuous scale (often with values such as +3 to −3 or +5 to −5).

- Judge data and judge misfit – information about who judged, how many judgements they made, how long they took, and how far their decisions diverged from the emerging consensus.

- Item data and item misfit – information about how often each item won or lost, its estimated position on the scale, and whether its pattern of wins and losses matches what the model would predict.

The important point is that these are not three separate verdicts about your session. They are three complementary perspectives on the same underlying story: how a group of judges collectively understood the quality of a set of items.

Why Reliability Is Only One Part of “Session Health”

Reliability in RM Compare reports is shown as a value between 0 and 1, where values closer to 1 indicate that if you repeated the session with similar judges and items, the resulting rank order would be very similar. Comparative judgement studies across subjects and age phases routinely report high reliability (often around 0.8–0.9 or above) when sessions are well-designed and sufficient judgements are collected. That makes it tempting to latch onto reliability as the single indicator of success.
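For readers who want a feel for where a value like 0.8 or 0.9 can come from, the sketch below computes scale separation reliability, a statistic widely reported in comparative judgement studies. It compares the spread of the item estimates with the average uncertainty around them. Whether RM Compare calculates its reliability in exactly this way is an assumption here, and the estimates and standard errors are made-up numbers.

```python
from statistics import pvariance


def scale_separation_reliability(estimates, standard_errors):
    """Share of the observed spread in item estimates that is not measurement error."""
    if len(estimates) != len(standard_errors) or len(estimates) < 2:
        raise ValueError("need matching lists with at least two items")
    observed_variance = pvariance(estimates)
    if observed_variance <= 0:
        raise ValueError("estimates must not all be identical")
    # Mean squared standard error approximates the error variance.
    error_variance = sum(se ** 2 for se in standard_errors) / len(standard_errors)
    # Floor at zero: noisy sessions can otherwise produce a negative value.
    return max(0.0, (observed_variance - error_variance) / observed_variance)


# Hypothetical session: five items spread from -2 to +2, each with SE 0.5.
estimates = [-2.0, -1.0, 0.0, 1.0, 2.0]
standard_errors = [0.5] * 5
print(scale_separation_reliability(estimates, standard_errors))  # 0.875
```

The same estimates with larger standard errors (fewer judgements per item) would give a lower value, which is why reliability tends to climb as a session collects more comparisons.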

However, treating reliability as a pass/fail threshold leads to predictable frustrations:

- A moderate reliability (for example, around 0.7) may still be entirely fit for formative purposes, or for generating rich professional dialogue, especially when time and resource are limited.

- A high reliability can mask important questions about how that reliability was achieved, for instance if most judgements were made by a small subset of judges, or if certain outlying items caused unresolved disagreement.

A more helpful framing is to see reliability as one dimension of “session health” alongside:

- Whether the rank order makes intuitive sense to informed practitioners once they inspect the work.

- Whether judge misfit suggests a broadly shared construct, with only a small number of outliers or misunderstandings to explore.

- Whether item misfit draws attention to a manageable set of particularly complex or boundary items.

A session with “only” moderate reliability but a coherent rank order and well-understood areas of misfit may be more informative than a session with a headline reliability of 0.9 that few people have interrogated.
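One way to interrogate a headline figure is to check how evenly the judging was shared. The sketch below is a hypothetical helper, not an RM Compare feature: it assumes you have a list with one judge identifier per judgement (for example, taken from your judge data) and reports what fraction of all judgements came from the busiest few judges.

```python
from collections import Counter


def top_judge_share(judge_ids, top_n=2):
    """Fraction of all judgements made by the `top_n` most active judges."""
    if not judge_ids:
        raise ValueError("no judgements supplied")
    counts = Counter(judge_ids)
    busiest = counts.most_common(top_n)
    return sum(count for _, count in busiest) / len(judge_ids)


# Hypothetical session: four judges, 100 judgements in total.
judgements = ["amy"] * 40 + ["ben"] * 35 + ["cat"] * 15 + ["dev"] * 10
print(top_judge_share(judgements, top_n=2))  # 0.75
```

There is no universal cut-off for this share. A high value is simply a prompt to ask whether the rank order reflects the whole group's consensus or mainly the view of a few people.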

The Three Lenses of Session Health

Across this series, every report will be interpreted through three simple lenses: story, alignment, and complexity.

- Rank order: the story of quality

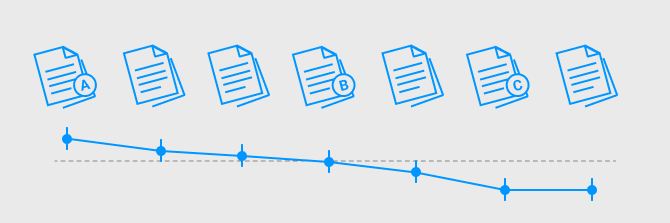

The rank order graph shows each item’s estimated quality on a single scale, with stronger items to the left and weaker ones to the right. The steepness of the connecting line segments reflects how large those differences are; steeper drops indicate clearer gaps in quality, while shallower slopes suggest more tightly bunched work. Parameter values provide a numerical version of these distances, but the key question is: does this story of quality make sense when you click into the work?

- Judge misfit: alignment of professional judgement

The judge misfit view shows which judges consistently saw things differently from everyone else. Dots above the red line are judges whose pattern of decisions diverges enough from the model’s expectations to be flagged for attention. This could be because they didn’t share the same construct, didn’t fully understand the task or holistic statement, or because they brought a genuinely different professional perspective that deserves discussion. Judge data such as decision times and number of judgements gives extra context: very short times can suggest superficial or random decisions, while very long times can signal uncertainty or over‑analysis.

- Item misfit: complexity within the work

The item misfit view highlights items whose win–loss pattern does not fit neatly with their estimated position on the scale. These are the pieces of work that divided opinion—boundary cases, ambiguous responses, or genuinely surprising performances. Rather than treating them as “bad data,” RM Compare’s own guidance frames misfit as describing the level of consensus in the session: non‑consensual items are often exactly the ones that generate the richest moderation conversations and the sharpest insights into task design.

By seeing each report through these three lenses, you can start to build a rounded picture of session health instead of relying on a single metric.
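The judge and item misfit views both rest on the same move: compare each judge's or item's misfit statistic with a threshold and look more closely at anything above it. This post does not say how RM Compare positions its red line, so the sketch below assumes a convention often used in comparative judgement work, namely flagging values more than two standard deviations above the mean. The function name and the misfit values are hypothetical.

```python
from statistics import mean, stdev


def flag_misfit(misfit_by_id, sd_multiplier=2.0):
    """Return (threshold, flagged ids) using a mean + k * SD rule.

    The mean + 2 SD rule is an assumed convention, not a documented
    RM Compare setting.
    """
    values = list(misfit_by_id.values())
    if len(values) < 2:
        raise ValueError("need at least two values to estimate a spread")
    threshold = mean(values) + sd_multiplier * stdev(values)
    flagged = sorted(key for key, value in misfit_by_id.items() if value > threshold)
    return threshold, flagged


# Hypothetical misfit statistics for eight judges.
judge_misfit = {
    "judge_1": 0.80, "judge_2": 0.90, "judge_3": 0.95, "judge_4": 1.00,
    "judge_5": 1.00, "judge_6": 1.05, "judge_7": 1.10, "judge_8": 2.60,
}
threshold, flagged = flag_misfit(judge_misfit)
print(flagged)  # ['judge_8']
```

The same function works for items if you pass item identifiers and item misfit values. Either way, a flag is a prompt to look at the work and talk to the judge, not a verdict.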

How to Use Reports While a Session Is Live

One under‑used aspect of RM Compare is that reporting is not just an end‑of‑session artefact; you can inspect data while the session is still in progress. This means you can intervene before problems harden into disappointments.

During a live session, you can:

- Monitor global completion and judge progress to ensure the planned number of judgements is actually being reached.

- Watch how reliability develops as more comparisons are made, helping you decide whether to keep the session open longer or whether the curve has flattened out and further judging will add little.

- Keep an eye on emerging judge misfit and decision time patterns so you can offer clarification or support to particular judges rather than discovering issues only at the end.

Thinking of the report as a live dashboard, not just a final scorecard, is one of the most powerful shifts you can make in how you work with RM Compare data.
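The decision about whether the reliability curve has flattened out can be made less subjective with a simple rule. The sketch below assumes you note the reliability value at regular checkpoints during the session (for example, after each round of judging) and treats the curve as flat when the gain over the last few checkpoints falls below a small amount. The window and the minimum gain are arbitrary illustrative choices, not RM Compare defaults.

```python
def has_plateaued(reliability_history, window=3, min_gain=0.01):
    """True when reliability rose by less than `min_gain` over the last `window` checkpoints."""
    if len(reliability_history) <= window:
        return False  # not enough checkpoints to judge a trend yet
    recent_gain = reliability_history[-1] - reliability_history[-1 - window]
    return recent_gain < min_gain


# Hypothetical reliability readings taken at regular intervals.
early = [0.42, 0.61, 0.72, 0.78]
later = [0.42, 0.61, 0.72, 0.78, 0.81, 0.815, 0.818, 0.819]

print(has_plateaued(early))  # False: still climbing steeply
print(has_plateaued(later))  # True: under 0.01 gained across the last three checkpoints
```

A plateau at a moderate value is still worth reading through the other lenses before closing the session, since further judging alone may not resolve disagreement about particular items.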

From Data to Design: Preparing for Later Posts

This first post has been about mindset. The key shifts are:

- From “Did my session pass the reliability test?” to “How healthy is this session across story, alignment and complexity?”

- From “Reports are technical and intimidating” to “Reports are conversation starters and design tools.”

- From “Misfit means something went wrong” to “Misfit shows where understanding is most contested and therefore most interesting.”

In the next post, the focus will move from this broad framing into the rank order itself. You will see how to read the rank order graph and parameter values in detail, how to relate them to reliability, and how to judge whether the story your session is telling about quality is strong enough for its intended purpose.