- Opinion

The Reliability Paradox: Why the Future of Assessment Will Be More Nondeterministic

In a previous post, we explored the "Three Mirrors" of assessment - the Left, Right, and Centre views that together provide a complete picture of learner performance.

Today, we want to look deeper into the glass. Specifically, we want to discuss why the "Left Mirror" (Holistic Assessment) works so differently from the "Right Mirror" (Absolute Assessment), and why the future of high-stakes evaluation is becoming more nondeterministic.


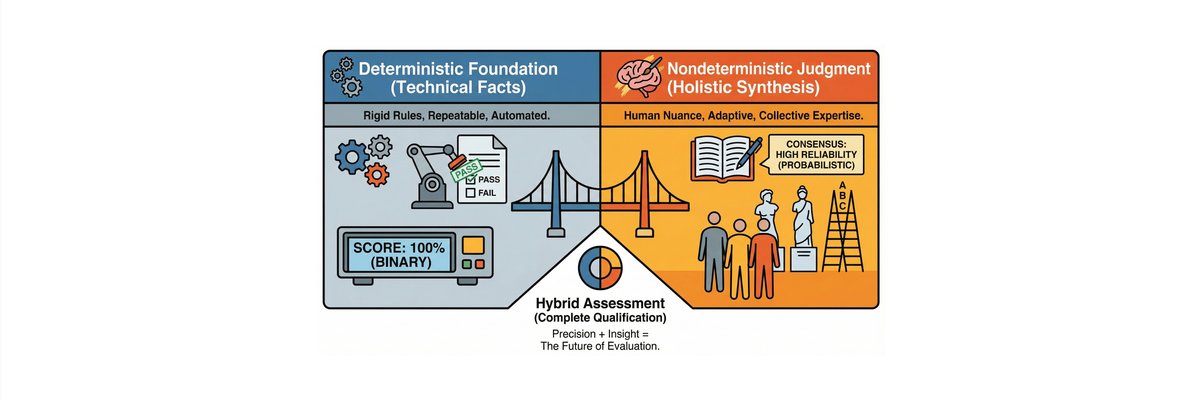

The Binary vs. The Probabilistic

Traditional assessment - the Right Mirror - is deterministic. Like a calculator, it relies on rigid, binary rules: "If X is present, give Y points." This is the bread and butter of our parent company, RM Assessment. It is essential for testing technical facts and foundational knowledge. It is the "blind spot" check that ensures basic standards are met.
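
For contrast, the deterministic "If X is present, give Y points" rule can be sketched in a few lines of Python. This is a minimal illustration, not how RM Assessment's auto-marking is actually built; the `Rule` type, the `auto_mark` function, and the rubric contents are all hypothetical. The property that matters is that the same answer always earns the same score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    keyword: str  # feature that must appear in the answer ("if X is present...")
    points: int   # points awarded when it does ("...give Y points")


def auto_mark(answer: str, rubric: list[Rule]) -> int:
    """Sum the points for every rule whose keyword appears in the answer.

    No judgment is involved: the same answer always receives the same score.
    """
    text = answer.lower()
    return sum(rule.points for rule in rubric if rule.keyword.lower() in text)


# Hypothetical rubric for a short science answer.
rubric = [
    Rule("photosynthesis", 2),
    Rule("chlorophyll", 1),
    Rule("carbon dioxide", 1),
]

answer = "Plants use chlorophyll to drive photosynthesis from carbon dioxide and water."
print(auto_mark(answer, rubric))  # 4
```

The flip side is visible too: any answer that mentions all three keywords earns full marks, however muddled its reasoning, which is the kind of nuance a checklist cannot see.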

But as we move toward the Left Mirror, we are looking at something far more complex: human synthesis, creativity, and professional nuance. This is where RM Compare operates, and it is nondeterministic: rather than applying the same fixed rule to every piece of work, it works with probabilities. Instead of a rubric, it uses Adaptive Comparative Judgment (ACJ): experts repeatedly compare two pieces of work and choose the better one, and the system builds a consensus ranking from many such decisions. The "behavior" of the software evolves with the subjective choices of the experts using it, because the pairs it presents next depend on the judgments already made. By embracing this "noisy" human variability, we actually reach a more reliable "truth" than a checklist ever could. We call this the Reliability Paradox.
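
Comparative judgment tools commonly turn many pairwise decisions into a single scale with a Bradley-Terry model (the paired-comparison form of the Rasch model), in which the chance that one piece of work beats another depends only on the difference between their quality parameters. The sketch below assumes that model and fits it with plain gradient ascent. It is an illustration, not RM Compare's implementation; the function name, learning rate, penalty, and judgments are hypothetical.

```python
import math


def fit_bradley_terry(judgments, n_iter=500, lr=0.1, l2=0.01):
    """Estimate a quality parameter (theta) for every item from pairwise decisions.

    judgments: list of (winner, loser) tuples, one per comparison.
    Model: P(a beats b) = 1 / (1 + exp(-(theta_a - theta_b))).
    A small L2 penalty keeps estimates finite even if an item never wins or never loses.
    """
    items = {item for pair in judgments for item in pair}
    theta = {item: 0.0 for item in items}
    for _ in range(n_iter):
        # Gradient of the penalised log-likelihood with respect to each theta.
        grad = {item: -l2 * theta[item] for item in items}
        for winner, loser in judgments:
            p_win = 1.0 / (1.0 + math.exp(-(theta[winner] - theta[loser])))
            grad[winner] += 1.0 - p_win  # larger push when the win was less expected
            grad[loser] -= 1.0 - p_win
        for item in items:
            theta[item] += lr * grad[item]  # gradient ascent step
    return theta


# Hypothetical session: four pieces of work, every pair judged twice.
judgments = [
    ("A", "B"), ("A", "B"),
    ("A", "C"), ("A", "C"),
    ("A", "D"), ("D", "A"),  # the two decisions on this pair disagree
    ("B", "C"), ("B", "C"),
    ("B", "D"), ("B", "D"),
    ("C", "D"), ("C", "D"),
]

theta = fit_bradley_terry(judgments)
ranking = sorted(theta, key=theta.get, reverse=True)
print(ranking)  # ['A', 'B', 'C', 'D']
```

One decision preferred D over A, yet A still tops the scale: a single disagreement nudges the estimates rather than overturning the consensus.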

Behind the Scenes: How Our Product Team Builds the "Left Mirror"

Designing for nondeterminism requires a total rethink of how we build software. As a product team, we approach this through three core pillars:

- Designing for "Productive Friction": Most apps want you to click faster. We don't. We design the UI to make you pause and engage your professional "gut feeling." We want the nondeterministic spark of human expertise, not a robotic reaction.

- Live Algorithmic Calibration: Because we don't use "virtual judges," our focus is on how the system handles real-world human variability. Our team builds the logic that identifies "misfit" - where a judge’s choices diverge from the emerging consensus. The algorithm then automatically adjusts, scheduling more pairings to resolve that "noise" and find the truth.

- Transparent Complexity: We know that "nondeterministic" can feel like a "black box." Our mission is to make it a "glass box." We build live telemetry and "Misfit" reports so you can see exactly how the consensus is forming in real time.
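
Misfit is usually quantified by comparing each decision with what the current scale predicts. The minimal sketch below assumes Bradley-Terry win probabilities and an information-weighted mean-square ("infit") statistic per judge, with quality estimates taken as already fitted. The threshold, judge names, and estimates are hypothetical, and RM Compare's own misfit calculation may differ.

```python
import math
from collections import defaultdict


def win_probability(theta_a: float, theta_b: float) -> float:
    """Bradley-Terry probability that item a is judged better than item b."""
    return 1.0 / (1.0 + math.exp(-(theta_a - theta_b)))


def judge_infit(decisions, theta):
    """Information-weighted mean-square (infit) misfit for each judge.

    decisions: list of (judge, winner, loser) tuples.
    theta: current quality estimate for each item.
    Values near 1 mean a judge is about as noisy as the model expects;
    values well above 1 mean the judge often diverges from the emerging consensus.
    """
    squared_residuals = defaultdict(float)
    variances = defaultdict(float)
    for judge, winner, loser in decisions:
        p = win_probability(theta[winner], theta[loser])  # expected chance of the observed outcome
        squared_residuals[judge] += (1.0 - p) ** 2  # observed outcome is 1 for the winner
        variances[judge] += p * (1.0 - p)
    return {judge: squared_residuals[judge] / variances[judge] for judge in variances}


# Hypothetical current estimates and decisions from two judges.
theta = {"A": 1.5, "B": 0.5, "C": -0.5, "D": -1.5}
decisions = [
    ("judge_1", "A", "B"), ("judge_1", "B", "C"), ("judge_1", "C", "D"),
    ("judge_2", "D", "A"), ("judge_2", "C", "B"), ("judge_2", "B", "A"),
]

MISFIT_THRESHOLD = 1.3  # hypothetical cut-off for illustration only
infit = judge_infit(decisions, theta)
flagged = [judge for judge, value in infit.items() if value > MISFIT_THRESHOLD]
print({judge: round(value, 2) for judge, value in infit.items()})  # {'judge_1': 0.37, 'judge_2': 4.51}
print(flagged)  # ['judge_2']
```

A flagged judge is a prompt to look closer, for example by scheduling extra pairings around the contested work, rather than proof that the judge is wrong.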

The Hybrid Reality: A Multi-Modal Future

The future isn't a choice between these two worlds; it’s a Hybrid Model. The most sophisticated organizations are now "Measuring the Measurable" and "Judging the Unmeasurable" in a single flow:

- The Right Mirror (Deterministic): Auto-marking handles technical foundations with binary precision.

- The Left Mirror (Nondeterministic): RM Compare handles holistic synthesis, ranking work based on collective expert judgment.

- The Centre Mirror (The Anchor): RM Echo acts as the authenticity check, ensuring the work is original - a vital step in the era of Generative AI.

The Organizational Shift: From Compliance to Competence

Adopting this "more nondeterministic" approach is a cultural leap. It requires a shift from Compliance (did they check the box?) to Competence (is the work actually good?).

- Trust the "Collective Eye": Move from trusting a piece of paper (the rubric) to trusting your people.

- Disagreement is Data: In a deterministic system, disagreement is a "fail." In a nondeterministic system, it’s a signal that highlights the most complex or "borderline" work.

- Manage the Convergence: Success isn't an instant data point. It’s the journey of your team reaching a shared understanding of what "excellence" looks like.
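
One way to put a number on convergence is scale separation reliability (SSR), which compares the spread of the estimated scale with the measurement error in those estimates. The sketch below assumes Bradley-Terry probabilities, standard errors derived from Fisher information, and fixed, hypothetical quality estimates; in a live session the estimates would be refitted after every round, and the reliability figure RM Compare reports may be calculated differently.

```python
import math
import statistics


def win_probability(theta_a: float, theta_b: float) -> float:
    """Bradley-Terry probability that item a is judged better than item b."""
    return 1.0 / (1.0 + math.exp(-(theta_a - theta_b)))


def scale_separation_reliability(theta, comparisons):
    """SSR = (observed variance - mean error variance) / observed variance.

    theta: current quality estimate for each item.
    comparisons: list of (item_a, item_b) pairs that have been judged;
    every item must appear in at least one comparison.
    """
    # Each comparison adds p * (1 - p) of Fisher information to both items.
    information = {item: 0.0 for item in theta}
    for a, b in comparisons:
        p = win_probability(theta[a], theta[b])
        information[a] += p * (1.0 - p)
        information[b] += p * (1.0 - p)
    # The squared standard error of each estimate is 1 / information.
    mean_error_variance = statistics.fmean(1.0 / information[item] for item in theta)
    observed_variance = statistics.pvariance(list(theta.values()))
    ssr = (observed_variance - mean_error_variance) / observed_variance
    return max(0.0, ssr)  # early on, error can exceed the spread; report 0 then


# Hypothetical, fixed estimates for four pieces of work.
theta = {"A": 1.5, "B": 0.5, "C": -0.5, "D": -1.5}
round_robin = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("B", "D"), ("C", "D")]

early = scale_separation_reliability(theta, round_robin)       # one round
later = scale_separation_reliability(theta, round_robin * 10)  # ten rounds
print(round(early, 2), round(later, 2))  # 0.0 0.8
```

After one round, measurement error swamps the spread and the sketch reports 0; after ten rounds of the same comparisons, the error variance is ten times smaller and reliability rises to about 0.8.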

Conclusion

As AI continues to automate the "deterministic" tasks of the world, our uniquely human ability to perceive quality and nuance is our most valuable asset.

We aren't building RM Compare to replace human judgment with an algorithm. We are building a nondeterministic engine to amplify it. By combining the precision of the Right Mirror with the insight of the Left, we aren't just looking "Back to the Future" - we are building the future of assessment today.