The Skills Imperative 2035: Why the Future of Assessment Can’t Be a Tick-Box Exercise

- NFER’s Skills Imperative 2035 predicts that by 2035 the labour market will be driven by six Essential Employment Skills, including creative thinking, collaboration and complex problem solving, rather than routine, easily automated tasks.

- These human, “hard‑to‑measure” skills are exactly the ones that traditional tick‑box mark schemes and rubrics struggle to assess validly, and forcing them into such schemes often narrows teaching to hoop‑jumping rather than genuine creativity and judgement.

- The report explicitly calls on schools and policymakers to evaluate tools for assessing EES and to monitor students’ development systematically, turning this into a concrete assessment and data challenge, not just a curriculum aspiration.

- RM Compare’s Adaptive Comparative Judgement enables reliable, holistic assessment of creative work, collaboration outcomes and multi‑modal portfolios, providing the systematic, trackable data on complex skills that NFER argues the system now needs.

Introduction

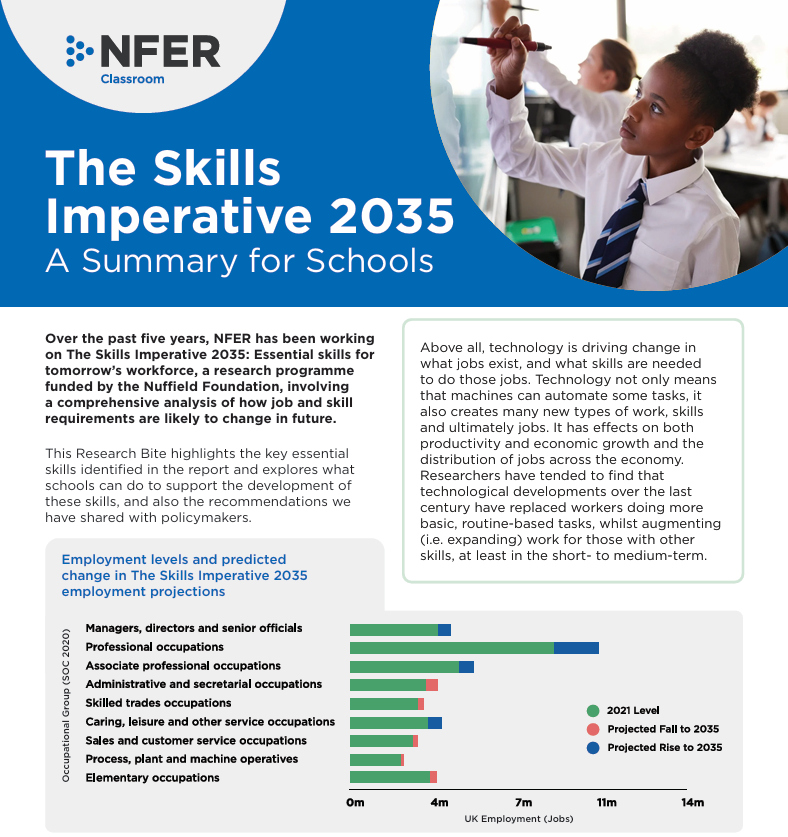

If you have been following the conversation around the future of education, you know the refrain: the world is changing, and schools need to adapt. But the latest report from the National Foundation for Educational Research (NFER), The Skills Imperative 2035, moves beyond generalities and puts hard data behind the challenge.

The report’s conclusion is stark: by 2035, the labour market will be driven not by routine tasks, but by six "Essential Employment Skills" (EES).

At RM Compare, we read this report with a sense of recognition. The skills it identifies as critical for the future are the ones our community has been championing for years, and they are precisely the skills that traditional mark schemes fail to capture.

The "Hard-to-Measure" Problem

The NFER report highlights that as automation takes over routine tasks, human value will shift to skills that are inherently subjective and complex:

- Creative Thinking: Defined as "the capacity to generate fresh ideas... and challenge assumptions".

- Collaboration: Described as "weaving together diverse perspectives" to achieve shared goals.

- Problem Solving: The ability to tackle "complex, ambiguous problems... when there may be no single right answer".

Here lies the problem. We all agree these skills matter. But how do you mark "collaboration" with a rubric? How do you validly assess "fresh ideas" with a tick-box mark scheme?

When we try to force these nuanced skills into rigid assessment frameworks, we often kill the very creativity we are trying to measure. We end up teaching students to "jump through hoops" rather than to think.

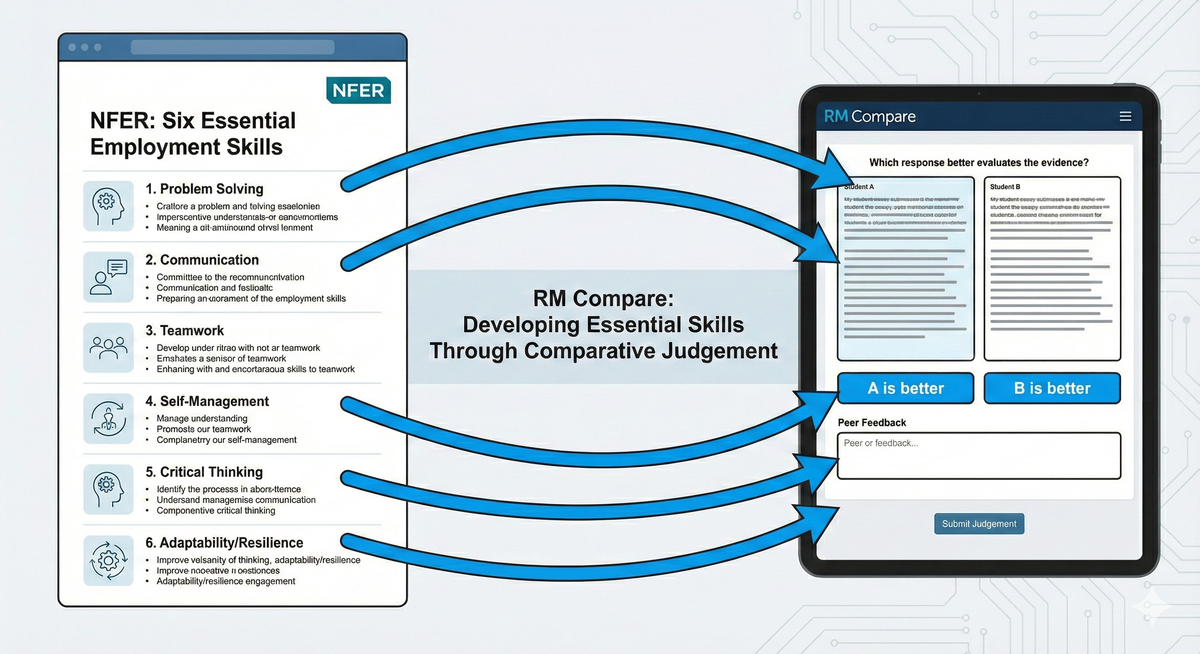

Answering the Call for New Tools

This is where the NFER report becomes a call to action. It explicitly recommends that schools and policymakers "Evaluate tools for assessing EES [and] monitoring students' skills gaps".

It also calls for schools to "monitor students' EES development systematically".

This is precisely why RM Compare exists.

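We built RM Compare on the principle of Adaptive Comparative Judgement (ACJ) because we believe that human judgement is better at recognising "quality" than a rubric is at defining it. In ACJ, judges repeatedly compare two pieces of work and decide which is better; a statistical model combines these decisions into a rank order on a common scale, and the "adaptive" element uses the emerging results to choose which pairs to show next.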

- Assessing Creativity: Instead of checking whether a student used "three adjectives", RM Compare allows judges to look at two pieces of creative work side-by-side and decide which one is more powerful. This holistic view respects the "fresh ideas" the NFER report calls for.

- Measuring Collaboration: Our users already use RM Compare to assess multi-modal portfolios and group projects. By comparing the outcomes of collaboration, they can generate reliable data on these "soft skills" without reducing them to a checklist.

- Systematic Monitoring: The NFER wants systematic tracking. RM Compare records every judgement and reports a reliability score for the resulting rank order, turning subjective teacher intuition into robust data that can be tracked over time.
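Pairwise judgements like those described above have to be turned into scores before they can be tracked. As a hypothetical sketch only (this is not RM Compare's implementation), the Python below assumes each judgement is stored as a (winner, loser) pair and fits a Bradley-Terry model, a standard statistical model for paired comparisons, using the minorisation-maximisation (MM) update. The function name `fit_bradley_terry`, the `prior` smoothing parameter and the essay labels are invented for illustration; the adaptive choice of pairs and the reliability statistic that a real ACJ session reports are not shown and may work differently.

```python
import math
from collections import defaultdict


def fit_bradley_terry(judgements, iterations=500, prior=1.0):
    """Estimate a quality score for each item from (winner, loser) judgements.

    Hypothetical sketch: fits a Bradley-Terry model with the MM update
        p_i <- W_i / sum_j n_ij / (p_i + p_j)
    where W_i is the number of comparisons item i won and n_ij the number of
    times items i and j were compared. Each item also gets `prior` virtual
    comparisons (half won, half lost) against a fixed reference item of
    strength 1, so scores stay finite even for work that won or lost every
    comparison. Keep prior > 0 for that guarantee.
    """
    items = sorted({item for pair in judgements for item in pair})
    wins = defaultdict(float)  # W_i: comparisons won by each item
    n = defaultdict(int)       # n_ij: comparisons between each unordered pair
    for winner, loser in judgements:
        wins[winner] += 1
        n[frozenset((winner, loser))] += 1

    p = {item: 1.0 for item in items}  # start every item at equal strength
    for _ in range(iterations):
        new_p = {}
        for i in items:
            # Virtual comparisons against the reference item (strength fixed at 1)
            denom = prior / (p[i] + 1.0)
            for j in items:
                if j != i:
                    denom += n[frozenset((i, j))] / (p[i] + p[j])
            new_p[i] = (wins[i] + prior / 2) / denom
        p = new_p

    # Convert to log-strengths centred on zero, giving a single measurement scale
    logs = {i: math.log(v) for i, v in p.items()}
    mean = sum(logs.values()) / len(logs)
    return {i: v - mean for i, v in logs.items()}


# Hypothetical judgements: each tuple is (preferred piece, other piece)
judgements = [
    ("essay_A", "essay_B"), ("essay_A", "essay_C"), ("essay_A", "essay_D"),
    ("essay_B", "essay_C"), ("essay_B", "essay_D"), ("essay_C", "essay_D"),
    ("essay_D", "essay_B"),
]
scores = fit_bradley_terry(judgements)
ranking = sorted(scores, key=scores.get, reverse=True)
for essay in ranking:
    print(f"{essay}: {scores[essay]:+.2f}")
```

Each score is a log-strength on a shared scale, so a gap of 1 between two pieces means the model expects the higher-scored piece to be preferred about 73% of the time (1 / (1 + e^-1) ≈ 0.731). The small prior keeps scores finite for work that won or lost every comparison, at the cost of pulling extreme scores slightly towards the middle.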

Don’t Wait for 2035

The report notes that the pace of change has "accelerated significantly in the last three years". The shift isn't coming; it's already here.

If we want to prepare students for the 2035 workplace, we cannot rely on the assessment tools of 1935. We need assessment that values the complex, the creative, and the collaborative.

The NFER has identified the destination. RM Compare provides the vehicle to get there.