Turning Research into Action: The OODA Loop at the Heart of RM Compare’s Innovation

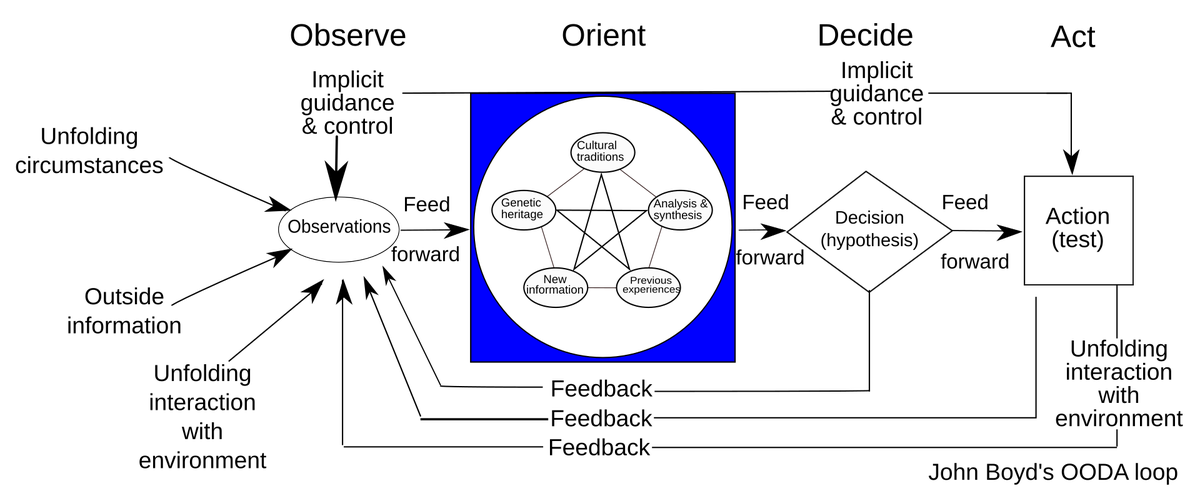

Innovation isn’t a straight line - it’s a dynamic cycle. Nobody grasped this better than John Boyd, the US Air Force Colonel and visionary strategist behind the OODA loop. Boyd’s model - Observe, Orient, Decide, Act - was developed to help fighter pilots out-think and out-manoeuvre their opponents. Over time, it became a blueprint for learning organisations everywhere: success comes from cycling through these stages faster and smarter.

Responding to research evidence - an example

A recent study by Kjetil Egelandsdal et al. (2025) explored the practical feasibility of Adaptive Comparative Judgement (ACJ) as a summative assessment method in legal education, focusing on a Property and Intellectual Property Law course at a Norwegian university. Its findings are critical to helping us improve RM Compare.

O: Observation—Learning from the Latest Research

Applying the OODA loop begins with observation. We’re looking outward, learning from the latest evidence, and tuning in to the reality our users face. This particular research study provided exactly the kind of insight that sparks change at RM Compare. The researchers put adaptive comparative judgement (ACJ) under the microscope, running sessions both in simulation and in real-life classrooms.

What did they observe? ACJ, in principle, is robust and fair, especially when enough judging rounds are completed. But practical barriers like session time pressures and uneven judge participation can hold back reliability. The takeaway: even sophisticated digital assessment systems need built-in ways to monitor and respond in real time, ensuring reliability isn’t left to chance.

O & D: Orientation and Decision—Our Approach to Prioritisation

Boyd’s next steps - Orient and Decide - mean interpreting what we’ve seen and picking the right priorities. The Egelandsdal et al. (2025) study made one thing clear: if session monitoring and judge engagement aren’t front and centre, assessment outcomes might fall short. That’s why our latest developments focus on making real-time progress monitoring and feedback not just available, but actionable.

Every new research insight prompts a period of ‘prioritisation’ - deciding what gets built next, and which improvements will make the biggest difference for teachers, learners, and assessment creators.

A: Act—Lowering the Cost of Change (and Speeding It Up)

To keep the OODA loop agile, you need to be able to act quickly and with confidence. That’s the thinking behind our major front-end rebuild (news coming soon!). As detailed in ‘How We Build RM Compare’, we’re slashing the time and effort it takes to release improvements. New tools - like enhanced dashboards, automated judge reminders, and smarter reliability alerts - can now go live faster, benefiting users and responding directly to emerging evidence and needs.

By lowering the cost of change, we’re able to act on new observations and decisions at speed. This is continual discovery and continual delivery in practice: always listening, always learning, always improving.

Completing the Loop—And Starting Again

John Boyd stressed that the real advantage goes to those who don’t treat thinking, learning, and action as a one-off, but embrace the loop. At RM Compare, every feature and every refinement in session monitoring, reliability feedback, or judge engagement comes from running the OODA loop repeatedly. Research delivers new observations; we orient ourselves and prioritise, act quickly with our adaptive technology, then scan the environment again for what’s next.

RM Compare: Research-Informed, User-Driven

We value independent research because it gives us sharper observations and keeps us honest, always challenging our assumptions and helping us see with fresh eyes. The speed and agility of our OODA-inspired process mean that research findings don’t sit on a shelf - they’re used to make assessment more reliable, fair, and dynamic.

Help Us Make RM Compare Even Better

None of this is possible without a community committed to continual learning and improvement. We invite every RM Compare user to be part of the loop: ask questions, share feedback, propose ideas, and be ready to see your insights shape the product, sometimes within weeks, not months.

We’re proud to be building RM Compare as a living example of the OODA loop in education technology. Together, we can outpace change, respond to new evidence, and deliver the best possible solutions for assessment and learning.

Ready to join us? Get involved, share your observations, and help RM Compare keep cycling through the OODA loop, making assessment stronger, smarter, and more responsive than ever.