Training & FE

For Training Providers & FE Colleges

Validate Internal Assessment.

Protect Your Funding.

Inconsistent marking across sites puts your Direct Claims Status at risk. RM Compare allows you to standardise judgement, upskill staff, and defend every grade decision to Awarding Organisations without the travel costs and logistics of face-to-face meetings.

100%

Audit Trail

Prove every decision to Ofqual

0.9+

Reliability Score

Data-backed assessment quality

100%

Coverage

Every assessor calibrated to the standard

The Challenge

Why Traditional IQA is Failing

With the 2025 apprenticeship assessment reforms shifting more responsibility to training providers, and End Point Assessment (EPA) now including provider-marked components, the risk of inconsistency has never been higher.

Your trainer in Manchester marks portfolios differently to your trainer in Bristol. Traditional Internal Quality Assurance (IQA) relies on 'dip sampling': checking 10% of work and hoping it represents the whole. But in vocational assessment, where portfolios are complex and rubrics are open to interpretation, small samples miss systematic bias. The result? Drift that compounds over time, leading to grade inconsistency and the risk of funding clawback when the External Quality Assurer (EQA) spots the gap.

Assessment in Action

"ACJ Transformation"

Get the basics of Adaptive Comparative Judgement

New to Comparative Judgement? Watch this 60-second explainer to see how it works—and why it's more reliable than rubrics.

An expert's view

Want the academic proof? Hear from Professor Scott Bartholomew (Purdue University) on why ACJ outperforms traditional marking for vocational assessment.

Remote Standardisation

Stop "Meeting", Start Judging.

You don't need to fly 50 trainers to a hotel to agree on a standard. Run a National Standardisation Window entirely online.

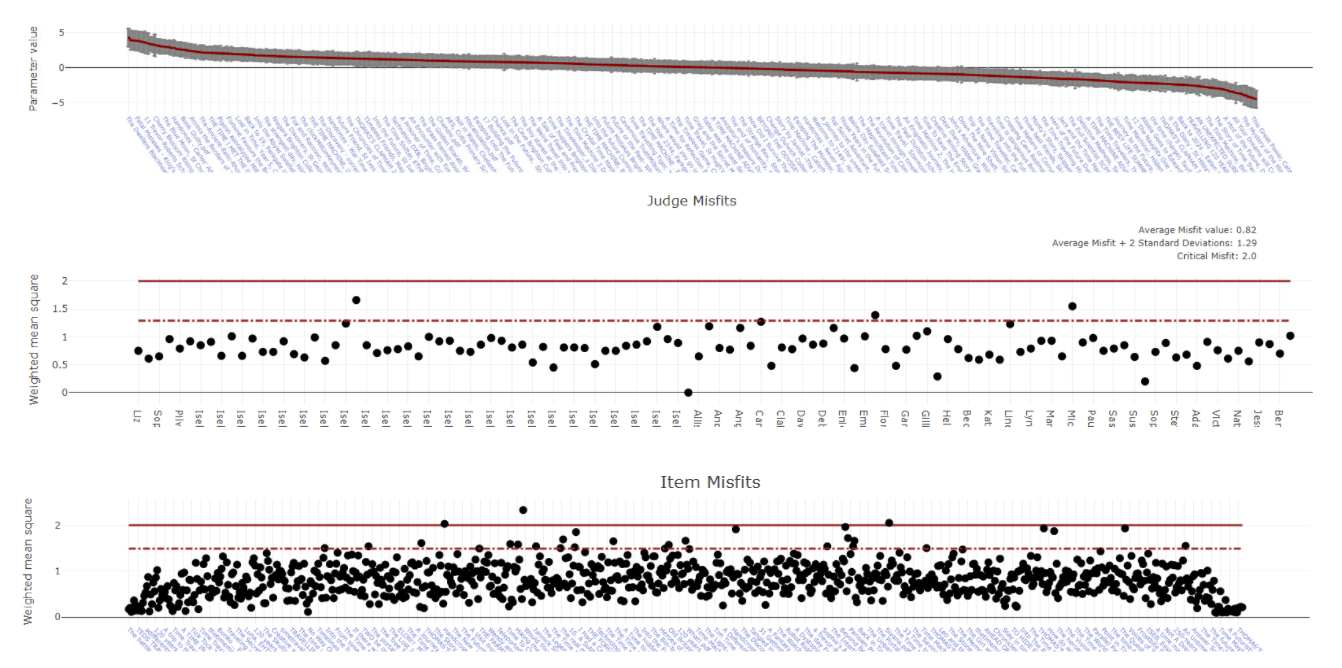

Upload samples of "Distinction" work from every site. Trainers compare anonymised portfolios in pairs (A vs B), each time deciding which better meets the standard. Our algorithm builds a consensus "Quality Ruler" from those decisions in minutes, instantly identifying which trainers are out of sync.
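Comparative judgement results are commonly modelled with the Bradley-Terry model, in which each portfolio has a hidden "strength" and the chance that A beats B depends on the difference between their strengths. RM Compare's own algorithm is not described on this page, so the following is only a minimal sketch of how a consensus scale (the "Quality Ruler") could be fitted from anonymous A vs B decisions, assuming a plain list of (winner, loser) pairs. The function name `fit_bradley_terry` and the data format are hypothetical.

```python
import math
from collections import defaultdict


# Hypothetical sketch: not RM Compare's implementation.
def fit_bradley_terry(judgements, iterations=200):
    """Estimate a log-strength for each item from (winner, loser) pairs.

    Uses the minorization-maximization (MM) update for the Bradley-Terry
    model: p_i <- W_i / sum_j n_ij / (p_i + p_j). Assumes every item has
    at least one win and one loss; otherwise no finite estimate exists
    and this sketch will fail.
    """
    items = sorted({item for pair in judgements for item in pair})
    wins = {item: 0 for item in items}
    n = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in judgements:
        wins[winner] += 1
        n[tuple(sorted((winner, loser)))] += 1

    p = {item: 1.0 for item in items}
    for _ in range(iterations):
        new_p = {}
        for i in items:
            denom = 0.0
            for (a, b), count in n.items():
                if i in (a, b):
                    j = b if i == a else a
                    denom += count / (p[i] + p[j])
            new_p[i] = wins[i] / denom
        # Rescale so log-strengths are centred on zero (the model is only
        # identified up to an additive constant on the log scale).
        log_mean = sum(math.log(v) for v in new_p.values()) / len(items)
        p = {i: v / math.exp(log_mean) for i, v in new_p.items()}

    return {i: math.log(v) for i, v in p.items()}
```

Sorting portfolios by these values gives the consensus rank order. A real ACJ tool would also choose which pairs to show next based on the judgements so far (the "adaptive" part of ACJ), which this sketch does not model.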

You get a live dashboard showing every assessor's position relative to the agreed standard. Those marking too generously get flagged for immediate retraining. Those marking too harshly get coaching. No guesswork. No assumptions. Just data.
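Tools built on comparative judgement typically identify out-of-sync judges with a misfit statistic: how often, and how badly, a judge's decisions contradict the consensus scale. The sketch below assumes an outfit-style mean squared residual and a hypothetical "mean plus two standard deviations" flagging rule; neither is confirmed as RM Compare's method, and the function names are illustrative. Misfit measures inconsistency with the consensus, not its direction: separating generous from harsh markers would also need each trainer's own grades, which this sketch does not model.

```python
import math
import statistics


# Hypothetical sketch: not RM Compare's implementation.
def judge_misfit(judgements, scale):
    """Outfit-style misfit per judge: mean squared standardised residual.

    judgements: list of (judge, winner, loser) tuples
    scale: dict mapping each item to its log-strength on the consensus scale
    """
    residuals = {}
    for judge, winner, loser in judgements:
        # Model probability that this winner beats this loser.
        p_win = 1.0 / (1.0 + math.exp(scale[loser] - scale[winner]))
        # For an observed win the squared standardised residual is
        # (1 - p)^2 / (p * (1 - p)), which simplifies to (1 - p) / p.
        residuals.setdefault(judge, []).append((1.0 - p_win) / p_win)
    return {judge: statistics.mean(r) for judge, r in residuals.items()}


def flag_out_of_sync(misfit, k=2.0):
    """Flag judges whose misfit exceeds the mean by more than k SDs (assumed rule)."""
    values = list(misfit.values())
    threshold = statistics.mean(values) + k * statistics.pstdev(values)
    return sorted(judge for judge, m in misfit.items() if m > threshold)
```

A judge who repeatedly prefers portfolios the consensus places lower accumulates large residuals, so their misfit rises well above their colleagues'.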

Staff Upskilling

A "Licence to Assess"

Don't let new staff mark live EPA assessments until they are ready. RM Compare acts as a training flight simulator for assessors.

✈️ Training Flight Simulator

New staff judge pre-seeded "Gold Standard" examples. They receive instant feedback on their accuracy, allowing them to calibrate without risking real learner grades.

✅ Regulator Readiness

Only certify staff to mark once they reach a reliability score of 0.9, the threshold used by exam boards for high-stakes qualifications. Traditional IQA has no comparable benchmark, leaving your grade decisions vulnerable to challenge.
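The page does not say how its 0.9 reliability score is calculated. In the comparative judgement literature the figure usually quoted is scale separation reliability (SSR), which describes how consistently the whole set of judgements separates the items rather than scoring one assessor. The sketch below computes SSR from a consensus scale, assuming standard errors approximated from Fisher information; the function name and input format are hypothetical.

```python
import math
import statistics


# Hypothetical sketch: not RM Compare's implementation.
def scale_separation_reliability(judgements, scale):
    """SSR = (observed variance - mean error variance) / observed variance.

    judgements: list of (winner, loser) pairs
    scale: dict mapping each item to its log-strength (e.g. from a
           Bradley-Terry fit); every item must appear in some judgement
    """
    information = {item: 0.0 for item in scale}
    for winner, loser in judgements:
        p = 1.0 / (1.0 + math.exp(scale[loser] - scale[winner]))
        # Each comparison contributes p(1 - p) of Fisher information
        # to both items' estimates.
        information[winner] += p * (1.0 - p)
        information[loser] += p * (1.0 - p)

    # Approximate each item's error variance as 1 / information.
    mean_error_var = statistics.mean([1.0 / info for info in information.values()])
    observed_var = statistics.pvariance(list(scale.values()))
    return max(0.0, (observed_var - mean_error_var) / observed_var)
```

Under these assumptions, more comparisons per item shrink the error variance and push SSR towards 1, so a target such as 0.9 effectively sets a minimum amount of judging.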

Works for Vocational Evidence

The gold standard for subjective, practical, and portfolio-based assessment.

"RM Compare allowed us to see for the first time exactly where our marking standards were drifting. We fixed it in one afternoon, saving us months of headaches."