
Understanding the meaning of Parameter Values in an RM Compare report

In comparative judgement (CJ) sessions, parameter values are numerical representations of the relative quality or difficulty of the items being judged. These values are derived from the collective decisions of judges who compare pairs of items and decide which one is superior. After several rounds of such judgements, a rank order of the items is established, with the parameter values indicating the relative distances between the items.
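The article does not describe how RM Compare estimates its parameter values, so the sketch below is only an illustration of the general idea. It swaps in the minorise-maximise (MM) algorithm for the Bradley-Terry model, whose pairwise probabilities have the same logistic form as the pairwise Rasch formulation. The function name `fit_parameters`, the item labels and the judgements are hypothetical.

```python
import math
from collections import defaultdict

def fit_parameters(judgements, n_iter=500):
    """Estimate logit-scale parameter values from (winner, loser) pairs.

    Uses the MM update for the Bradley-Terry model. Assumes every item has
    at least one win and one loss and that the comparisons link all items
    together; otherwise some estimates drift towards +/- infinity.
    """
    items = sorted({item for pair in judgements for item in pair})
    wins = defaultdict(int)    # total wins per item
    counts = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in judgements:
        wins[winner] += 1
        counts[frozenset((winner, loser))] += 1
    if any(wins[i] == 0 for i in items):
        raise ValueError("every item needs at least one win")

    strength = {i: 1.0 for i in items}
    for _ in range(n_iter):
        updated = {}
        for i in items:
            # Sum over every pair involving i: n_ij / (strength_i + strength_j)
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in counts.items() if i in pair
                for j in pair - {i}
            )
            updated[i] = wins[i] / denom
        # Rescale so the mean logit is 0 (the scale has no natural origin).
        shift = sum(math.log(s) for s in updated.values()) / len(updated)
        strength = {i: s / math.exp(shift) for i, s in updated.items()}
    return {i: math.log(s) for i, s in strength.items()}

# Hypothetical judgements: each tuple is (item judged better, other item).
judgements = [
    ("A", "B"), ("A", "C"), ("A", "D"), ("A", "B"), ("B", "A"),
    ("B", "C"), ("B", "D"), ("C", "B"), ("C", "D"), ("D", "C"),
]
params = fit_parameters(judgements)
for item in sorted(params, key=params.get, reverse=True):
    print(item, round(params[item], 2))
```

Centring the values on 0 reflects the fact that only differences between parameter values carry meaning; adding the same constant to every item would not change any predicted judgement.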

A simplified explanation

- Parameter Values: Think of parameter values as scores that represent how good an item is compared to others. These aren't scores given directly by the judges but are calculated based on the judges' decisions during the CJ process.

- Rank Orders: The rank order is the final list of items, arranged from the best to the worst (or from the easiest to the hardest, depending on the context). The rank order is determined by looking at all the judges' decisions and calculating which items were generally preferred over others.
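Once parameter values exist, the rank order is simply the items sorted by value, and the gaps between neighbours show how far apart consecutive items are. This is a minimal sketch; `rank_order` and the script names and values are hypothetical and are not taken from an RM Compare report.

```python
def rank_order(params):
    """Return (rank, item, value, gap_to_next) rows, best item first."""
    # Sort by parameter value, highest first; ties keep their input order.
    ordered = sorted(params.items(), key=lambda kv: kv[1], reverse=True)
    rows = []
    for rank, (item, value) in enumerate(ordered, start=1):
        # ordered[rank] is the next item down; the last item has no gap.
        gap = value - ordered[rank][1] if rank < len(ordered) else None
        rows.append((rank, item, value, gap))
    return rows

# Hypothetical parameter values for four scripts.
params = {"Script 1": 1.2, "Script 2": -0.4, "Script 3": 2.5, "Script 4": -3.3}
for row in rank_order(params):
    print(row)
```

Two lists can share the same rank order while having very different gaps, which is why the parameter values carry more information than the ranks alone.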

An example

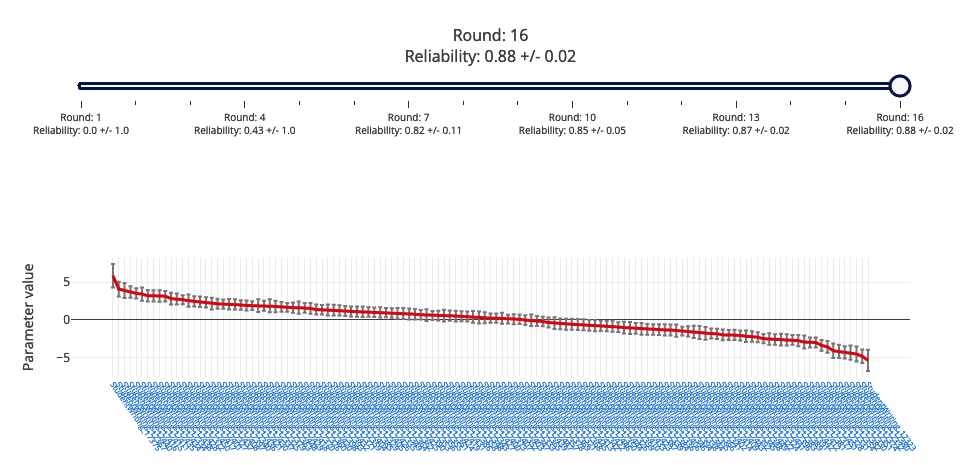

In the session shown, the parameter values range from -5 to +5. This suggests a wide spread of quality among the items assessed. The range is not inherently good or bad; it simply reflects the spread of the items' qualities or difficulties as determined by the judges' comparisons.
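One way to get a feel for a range like this is to translate differences in parameter values into probabilities. The sketch below assumes the values are on the logit scale used by Rasch-type models, so that the chance of item i being judged better than item j is 1 / (1 + exp(-(v_i - v_j))). The function names and the example values are hypothetical.

```python
import math
import statistics

def p_beats(v_i, v_j):
    """Probability that item i is judged better than item j (logistic form)."""
    return 1.0 / (1.0 + math.exp(-(v_i - v_j)))

def describe_spread(values):
    """Summarise how widely a set of parameter values is spread."""
    top, bottom = max(values), min(values)
    return {
        "range": top - bottom,
        "sd": statistics.pstdev(values),
        "p_top_beats_bottom": p_beats(top, bottom),
    }

# Hypothetical values spanning -5 to +5, similar to the example session.
values = [5.0, 2.1, 0.3, -1.8, -5.0]
print(describe_spread(values))
print(p_beats(1.0, 0.0))  # two items one logit apart
```

Under this assumption, a 10-logit span means the top item would be expected to beat the bottom item in all but a tiny fraction of comparisons, while items one logit apart are separated only by roughly a 73% to 27% split.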

Things to consider

The critical question is whether the range of parameter values allows clear and meaningful differentiation between the items. If the range is too narrow, it might not sufficiently distinguish the best items from the worst; inconsistent judging can also produce a narrow range, because disagreement between judges pulls parameter values towards the middle. Conversely, if the range is very wide, it is worth checking whether some items are outliers, for example items that won or lost almost every comparison, or whether there are inconsistencies in the judging process.
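Whether a spread of values is "meaningful" depends on how large it is relative to the uncertainty in each value. A common way to express this in comparative judgement is Scale Separation Reliability (SSR): the share of the observed variance in parameter values that is not explained by measurement error. This is a sketch of that general formula under stated assumptions (population variance, one standard error per item); whether RM Compare computes its reliability figure exactly this way is not stated here, and the values and standard errors below are hypothetical.

```python
import statistics

def scale_separation_reliability(values, std_errors):
    """SSR = (observed variance - mean squared SE) / observed variance."""
    observed_var = statistics.pvariance(values)
    if observed_var == 0:
        return 0.0  # all items identical: nothing to separate
    mean_sq_error = statistics.fmean([se ** 2 for se in std_errors])
    # Clamp at 0: error can exceed the observed spread for narrow scales.
    true_var = max(observed_var - mean_sq_error, 0.0)
    return true_var / observed_var

# Hypothetical sessions with the same standard errors but different ranges.
wide = scale_separation_reliability([3.0, 1.0, -1.0, -3.0], [1.0] * 4)
narrow = scale_separation_reliability([0.5, 0.2, -0.2, -0.5], [1.0] * 4)
print(wide, narrow)
```

With identical standard errors, the wide scale separates the items well, while the narrow one has less spread than its error alone would produce, so it cannot reliably distinguish the items.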

The usefulness of the parameter values depends on the context of the assessment and the goals of the session. For example, in educational assessments, a wide range of parameter values can help identify which student responses demonstrate higher levels of competence or understanding. In other contexts, such as quality control, a narrower range might be preferable, because it indicates that the items being evaluated are of consistent quality.

Ultimately, the parameter values should be interpreted in light of the assessment's objectives and the consistency of the judging process. If the judging process is reliable and the parameter values align with the intended outcomes of the assessment, then the range of values can be considered appropriate for the session.

What can influence the differences between items' parameter values?

- Number of Comparisons: The number of comparisons made during the judgement process influences the precision of the parameter values. More comparisons per item lead to more precise estimates, that is, smaller standard errors around each item's parameter value.

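- Item Difficulty and Person Ability: In the Rasch model (used by RM Compare), item parameters represent the difficulty of items, while person parameters represent the ability or competence of the individuals being assessed. The higher a person's ability relative to an item's difficulty, the higher the probability of a correct response on that item. In comparative judgement, the same logistic relationship is applied to pairs of items: the larger the difference between two items' parameter values, the more likely the higher-valued item is to be judged better.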

- Judges' Decision-Making Process: Judges' decisions can be influenced by various factors, including their personal values, biases, and the complexity of the decision-making process. These factors can indirectly influence the parameter values of the items being judged.

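- Uncertainty and Measurement Error: Measurement error is not uniform across the scale; it is larger for more extreme values (very low and very high). In comparative judgement, items that win or lose almost all of their comparisons provide less information, so their parameter values are estimated less precisely. This uncertainty, which can be expressed as a standard error for each item, also affects how far apart items appear.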

- Statistical Models and Algorithms: The statistical model and estimation algorithm used also influence the results. The Rasch model (used by RM Compare), the Bradley-Terry-Luce model and Thurstone's Law of Comparative Judgment use different parametrizations: the Rasch and Bradley-Terry-Luce formulations use a logistic function, while Thurstone's model is based on the normal distribution. Parameter values from different models are therefore on different scales and should not be compared directly.

- Context and Construct Validity: The context in which the judgements are made and the construct validity of the measure can also influence the parameter values. This includes the relevance and usefulness of the inferences and actions based on the scores, and the extent to which judges share a common understanding of what makes one item better than another.
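Several of the factors in the list above can be illustrated numerically. The sketch below compares the logistic (Rasch / Bradley-Terry-Luce) and normal (Thurstone Case V) forms of the pairwise probability, and approximates an item's standard error from the Fisher information of its comparisons. The function names are hypothetical, and the standard-error formula is a simplification that treats the opponents' parameter values as known; it is not presented as RM Compare's calculation.

```python
import math

def p_logistic(diff):
    """Rasch / Bradley-Terry-Luce form: P(item i judged better), diff = v_i - v_j."""
    return 1.0 / (1.0 + math.exp(-diff))

def p_probit(diff):
    """Thurstone Case V form: standard normal CDF of the difference."""
    return 0.5 * (1.0 + math.erf(diff / math.sqrt(2.0)))

def standard_error(v_i, opponents):
    """Approximate SE of item i's parameter value under the logistic model.

    Treats the opponents' parameter values as known. Each comparison adds
    Fisher information p * (1 - p), which peaks at 0.25 for evenly matched
    pairs and falls towards 0 for lopsided ones.
    """
    info = 0.0
    for v_j in opponents:
        p = p_logistic(v_i - v_j)
        info += p * (1.0 - p)
    return 1.0 / math.sqrt(info)

# Number of comparisons: quadrupling the even matches halves the SE.
print(standard_error(0.0, [0.0] * 10), standard_error(0.0, [0.0] * 40))
# Extreme item: 10 comparisons against much weaker items give a larger SE.
print(standard_error(4.0, [0.0] * 10))
# Same difference, different model, different probability.
print(p_logistic(1.0), p_probit(1.0))
```

The standard errors come out at roughly 0.63 and 0.32 for 10 and 40 even comparisons, and about 2.4 for the extreme item. A one-unit difference gives about 0.73 under the logistic form but about 0.84 under the normal form; the two curves roughly agree only after rescaling the logistic difference by about 1.7, which is why parameter values from different models are not interchangeable.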

In summary, the differences between items' parameter values in a comparative judgement session can be influenced by a variety of factors: the number of comparisons, item difficulty and person ability, the judges' decision-making process, uncertainty and measurement error, the statistical model and algorithm used, and context and construct validity.