- Opinion

The Validation Layer: Why AI Needs a Human Anchor to be Safe

Key points

- Schools, exam boards and employers are drifting into a closed loop of “machines grading machines”, with AI‑generated work being marked by AI, while human understanding of real quality quietly drops out of the system.

- In complex, subjective domains there is no single ground truth, so training AI on rubrics only teaches it to mimic surface rules (keywords, structure), not to recognise genuine quality, making black‑box errors and “thesaurus trap” hallucinations hard to spot.

- RM Compare’s Validation Layer uses Adaptive Comparative Judgement on a sample of work to create a human consensus benchmark, then overlays this with AI scores to detect “misfit” cases where the model diverges from expert judgement.

- This human anchor lets organisations let AI handle volume while automatically flagging the risky 10–20% of cases for review, turning AI from an unsupervised decision‑maker into a scalable but continuously validated assistant.

👉 How to use: Select your use case above. The generated prompt will instruct the AI to design a strategy that uses RM Compare as the "Human Anchor" to validate your automated tools.

Introduction

We are currently living through an "Assessment Arms Race."

On one side, students and job candidates are using Generative AI (like ChatGPT) to produce "perfect" essays and CVs in seconds. On the other side, institutions are rushing to buy AI marking tools to grade that work just as fast.

It is a closed loop of machines grading machines. And in the middle of this loop, the human element - the actual understanding of quality - is quietly disappearing.

This creates a massive risk for schools, exam boards, and employers. We know that AI models are "Black Boxes." They hallucinate. They can be tricked by complex vocabulary ("The Thesaurus Trap"). They can give an 'A' to an essay that is grammatically flawless but factually nonsense. They can rank a candidate as "Top Tier" simply because their CV was optimized for the right keywords, not because they have the right skills.

This leads to the single biggest strategic question in assessment today:

"How do we enjoy the speed of AI without risking the integrity of the result?"

The answer lies in the cognitive science we explored in Part 1 and Part 2 of this series. We don't need to choose between Human and AI. We need to use Human Judgement as the Validation Layer - the "Anchor" - that keeps the AI honest.

The "Ground Truth" Paradox

To understand why relying solely on AI is dangerous, you first have to understand how AI learns.

AI models are hungry for "Ground Truth." To teach a machine to grade Math, you feed it millions of equations where 2 + 2 = 4. The Ground Truth is absolute. The AI learns the rule, and it never fails.

But how do you teach an AI to grade an English essay, a Fine Art portfolio, or a senior management CV?

The Ground Truth Paradox: In subjective assessment, there is no single "correct" answer.

Historically, we have tried to solve this by writing Rubrics - lists of text rules like "Uses sophisticated vocabulary" or "Shows critical thinking." But as we discussed in Post 1, text is a "Modal" trap.

- An AI can identify "sophisticated vocabulary" (keywords).

- It cannot identify if that vocabulary is being used to mask a lack of understanding.

When you train an AI on rubrics, you aren't teaching it to judge quality; you are teaching it to mimic the rules of quality. This is why we see "perfect" AI-generated CVs that are actually hollow, and why AI markers often give high scores to hallucinated waffle.

The Solution: Manufacturing Truth

If rules don't work, what does? Consensus.

In the human world, "Ground Truth" isn't a rulebook; it is a shared understanding between experts. When five experienced hiring managers all look at a candidate and say, "This is the one," that consensus is the truth.

RM Compare allows you to capture this. By aggregating the comparative judgements of human experts, we distill fuzzy, subjective opinions into a hard data point. We don't ask humans to follow a rule (which AI can game); we ask them to find the truth (which AI cannot).

The Mechanism: The "Validation Layer"

So, how do we solve the paradox? We don't try to stop the AI revolution. We anchor it.

At RM Compare, we have developed a methodology we call the Validation Layer. It is based on a simple principle: AI provides Speed; Humans provide Truth.

Here is how the workflow operates:

- The AI Pass: You use your AI tools to mark 100% of the artifacts (essays, CVs, portfolios) at lightning speed. This gives you a preliminary dataset.

- The Human Anchor: Simultaneously, you run a "dip sample" (e.g., 10-20%) of those same artifacts through RM Compare. Human experts judge them using Adaptive Comparative Judgement (ACJ).

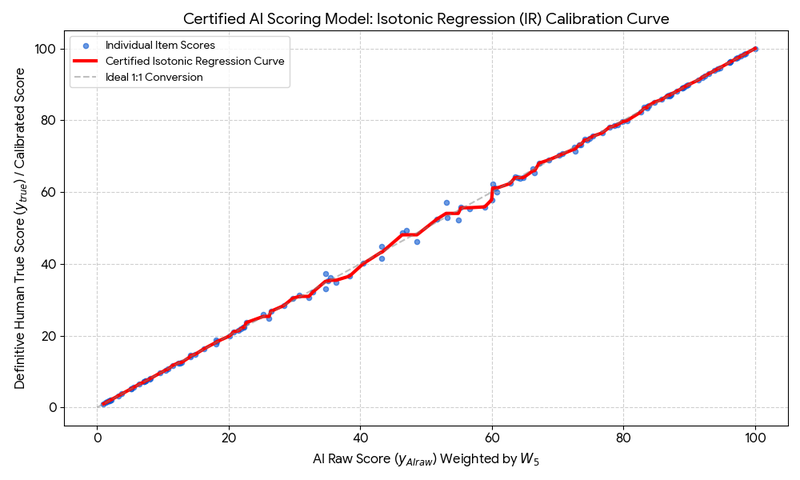

- The Calibration: We overlay the two datasets.

The "Hallucination Detector" This overlay creates a powerful diagnostic tool. We can plot the AI’s scores against the Human Consensus rank.

- When the data aligns: The AI is performing well. You can trust the automated results.

- When the data diverges: This is the critical signal. We call this Misfit.

The "Hallucination Detector". This overlay creates a powerful diagnostic tool. We can plot the AI’s scores against the Human Consensus rank.

- When the data aligns: The AI is performing well. You can trust the automated results.

- When the data diverges: This is the critical signal. We call this Misfit.

This divergence is not random error. It is the Amodal Gap appearing in your data.

It happens exactly when the artifact is complex, creative, or nuanced. The AI might have given a high score because it found the right keywords (Modal Completion), but the Human Consensus ranked it low because the argument lacked logic (Amodal Completion).

By treating Human Judgement as the "Anchor," you create a safety net. You can let the AI handle the mundane 80% of the work, while the system automatically flags the risky 20% for human review. You get the efficiency of automation without sacrificing the validity of the assessment.

The Proof: Real World "Anchors"

This concept of using human judgement to validate and correct data isn't just a theory. It is already happening in high-stakes environments.

Case Study 1: Recruitment (Correcting the AI Bias) In the financial sector, Amplify Trading faced a classic "Black Box" problem. Traditional screening (often automated by ATS) relies on the CV—a text document easily polished by AI.

- The Solution: Instead of screening text, they asked candidates to perform a live simulation (a work sample).

- The Anchor: Future peers and colleagues judged the output using RM Compare.

- The Result: The human judges didn't look for keywords. They used Social Amodal Completion to infer future performance from the candidates' actions. They found high-potential talent that the algorithm - blinded by "imperfect" CVs - would have rejected. The humans corrected the machine's blind spots.

Case Study 2: Standard Setting (Creating the Ground Truth) When the Independent Schools Examination Board (ISEB) launched a new project qualification, they faced a different problem: The Cold Start.

- The Problem: You cannot train an AI to mark a new qualification because there is no historical data. There is no "Ground Truth" yet.

- The Anchor: They used RM Compare to facilitate a judging session with expert teachers.

- The Result: By comparing student work, the judges emerged the standard. They created the "Ground Truth" from scratch. This proves a fundamental rule of the AI era: AI can follow a standard, but only humans can invent one.

In both cases, RM Compare provided the necessary human layer that turned raw data into trusted, valid outcomes.

Conclusion: The Hybrid Future

For the last few years, the debate in assessment has been binary: Human vs. AI.

Traditionalists argued that AI can never understand quality. Futurists argued that humans are too slow and expensive.

The truth, as always, is somewhere in the middle. We cannot go back to a world where every single artifact is manually reviewed by a human—the volume is simply too high. But we also cannot sprint blindly into a future where "Black Box" algorithms decide our grades and our careers without supervision.

The future of assessment is Hybrid.

It is a future where AI provides the Scale, and Humans provide the Standard.

- Use AI to handle the volume.

- Use RM Compare to handle the nuance.

- Use the Validation Layer to ensure the two are telling the same story.

Your organisation doesn't need to choose between speed and safety. By anchoring your AI strategy in high-fidelity human consensus, you can have both.

Don't just automate. Validate.