Blog

Posts for category: AI & ML

-

Why the Discrepancy Between Human and AI Assessment Matters—and Must Be Addressed

As AI-powered tools increasingly participate in educational assessment, a critical challenge comes into sharp focus: AI judges very differently from humans. This difference is more than technical; it threatens the fairness, trust, and validity of assessment systems if left unaddressed.

-

New 6-Part Blog Series - “All Judgements Are Comparisons”: The Human Foundation Beneath AI Assessment

At the heart of every debate around assessment, whether about student work, creative output, or performance reviews, lies a deceptively simple truth: all judgements are comparative. This insight isn’t just a philosophical curiosity; it is the key to understanding why trust and validity in marking are so challenging in an era of artificial intelligence.

-

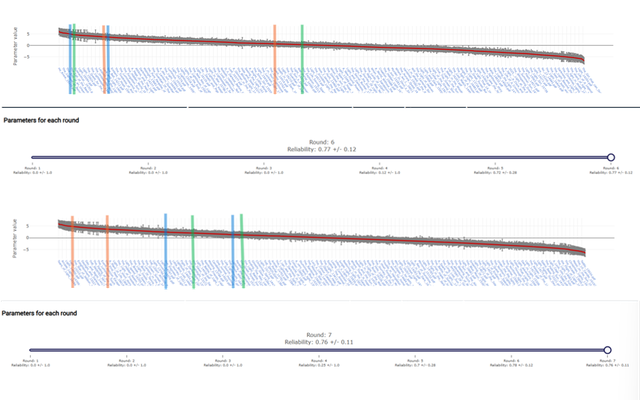

RM Compare as the Gold Standard Validation Layer: Recent Sector Research and Regulatory Evidence

In 2025, the educational assessment sector experienced a step change in the evidence base supporting Comparative Judgement (CJ) as a validation layer—bolstering the RM Compare approach described throughout this paper. Major independent studies, regulatory pilots, and industry-led deployments have converged on the effectiveness, reliability, and transparency that CJ-powered systems provide for AI calibration and human moderation alike.

-

Blog Series Introduction: Can We Trust AI to Understand Value and Quality?

Artificial intelligence is becoming deeply embedded in how we teach, learn, and assess. But as LLMs and automated marking tools step into spaces once reserved for humans, a fundamental question emerges: can AI truly understand what we mean by quality and value?

-

Variation in LLM perception of value and quality

Can LLMs understand concepts of 'value' and 'quality' in the same way as humans do? If not, what does this mean for AI assessment? We completed a short study to explore these questions and to think through some of the implications.

-

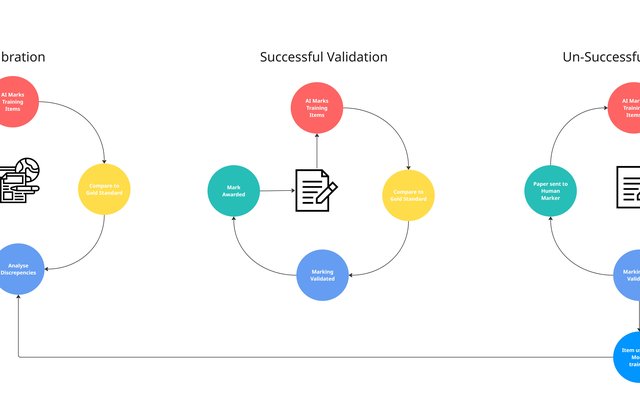

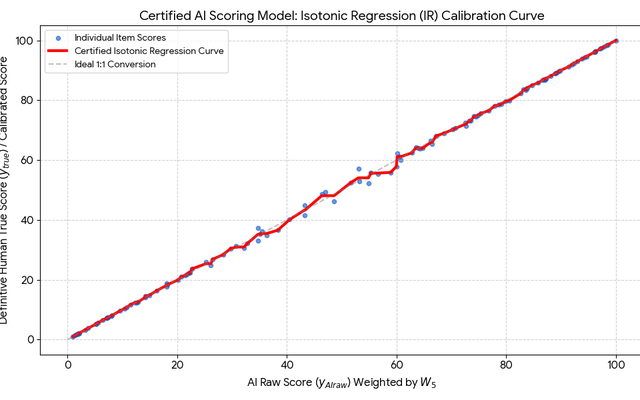

Fairness in Focus: The AI Validation Layer Proof of Concept Powered by RM Compare

In today’s rapidly changing educational landscape, the key challenge isn’t just whether AI can mark student work, but how to ensure every mark is reliably fair. With that mission in mind, our latest proof of concept was designed to demonstrate why RM Compare is uniquely positioned as the foundation for trustworthy, scalable automated assessment.

-

Building Trust: From “Ranks to Rulers” to On-Demand Marking

The foundations of modern assessment are shifting. Not long ago, RM Compare introduced “ranks to rulers”: a pioneering process that transformed human judgements (ranking student work by quality) into reliable, calibrated measuring scales that educators and students could trust. Now, new AI validation methods are taking these ideas further, making instant, trustworthy assessment a reality for everyone.

-

Who is Assessing the AI that is Assessing Students?

As AI steps into the heart of education, we celebrate the speed and efficiency of machine-marked assessments. But a deeper question shadows every advance: If an AI can now judge student work, who—if anyone—is judging the AI? Could an RM Compare AI Validation Layer be the answer?

-

'Cognitive Offloading', 'Lazy Brain Syndrome' and 'Lazy Thinking' - unintended consequences in the age of AI

Generative AI and LLMs are everywhere in 2025 classrooms, making teaching and learning faster than ever. But as research in the Science of Learning shows, letting technology do too much of the thinking for us—“cognitive offloading”—weakens the very skills education aims to build.