Blog

Posts for category: Product

-

New - Introducing the RM Compare Modular Ecosystem

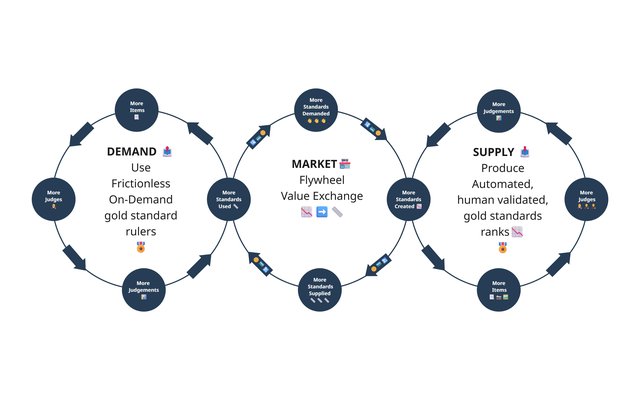

For years, RM Compare has helped teachers, exam boards, universities and recruiters turn thousands of “which is better?” decisions into fair, reliable rankings of complex work. What began as a powerful way to compare pieces of work has now grown into something bigger: a complete ecosystem for creating, managing, and using gold‑standard judgements at scale. Today we’re introducing RM Compare | Live, Studio, Hub – three modules that work together as a continuous flywheel.

-

RM Compare has grown up

What began as a powerful way to compare pieces of work is now a complete, production‑ready layer of judgement infrastructure that sits underneath your existing systems and makes subjective assessment fair, fast, and repeatable at any scale. We’ve reached what Geoffrey Moore calls the “whole product”: not just the clever core technology, but everything wrapped around it that a mainstream organisation needs before trusting it with real‑world stakes.

-

New! Create interactive reports with your LLM (Experiment)

On the Advanced and Enterprise Plans, every RM Compare session lets you extract very detailed data sets. We already provide some great reporting; however, the latest generative AI tools may be able to give you even deeper insights.

-

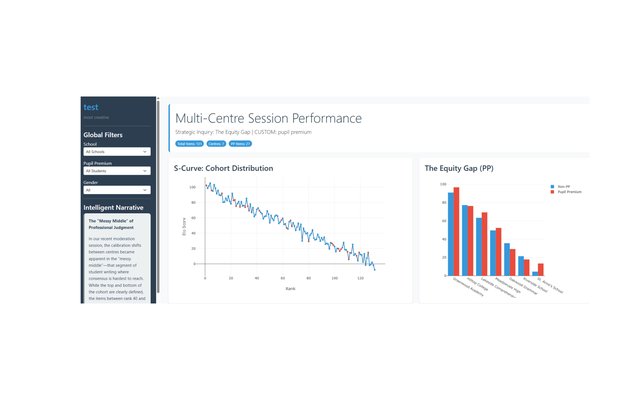

Preparing for a New Era of Trust Accountability: How RM Compare Supports MAT Leaders Through Statutory Inspections

The education landscape for multi-academy trusts is about to change fundamentally. With the government confirming statutory inspections of academy trusts from as early as 2027, MAT leaders now face a critical question: Is your assessment strategy inspection-ready?

-

📳 Introducing the new RM Compare Companion App

The RM Compare Companion App turns professional judgement into a mobile-first experience: quickly capture Items and add them to your RM Compare sessions.

-

The 90% Problem: Why "Dip Sampling" Can No Longer Protect Your Provision

The 2025 apprenticeship assessment reforms have shifted responsibility for quality assurance decisively towards training providers. With the launch of Skills England and new flexibility in assessment delivery, providers are no longer just preparing learners; they are increasingly validating them.

-

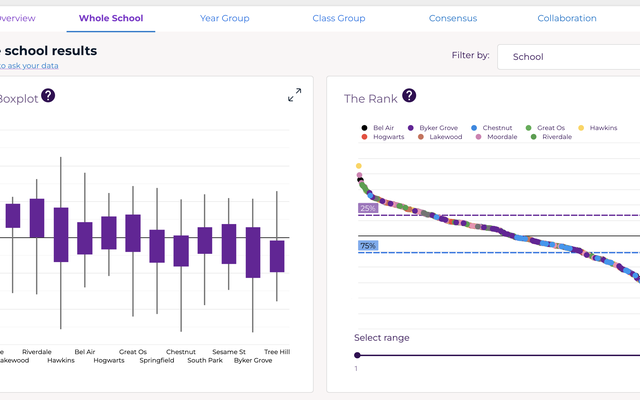

Designing Healthy RM Compare Sessions: Build Reliability In, Don’t Inspect It In

The best way to avoid the “we worked hard but reliability is low” moment is to design sessions so that health is baked in from the start. Healthy sessions are not accidents: they result from a clear purpose, good task design, well‑briefed judges, and enough comparisons to let the model discover a shared scale of quality. This post turns what you now know about rank order, judge misfit and item misfit into concrete design principles you can apply before, during and after a session.

-

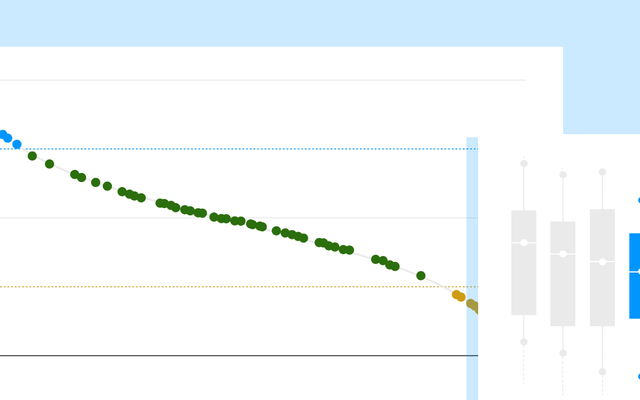

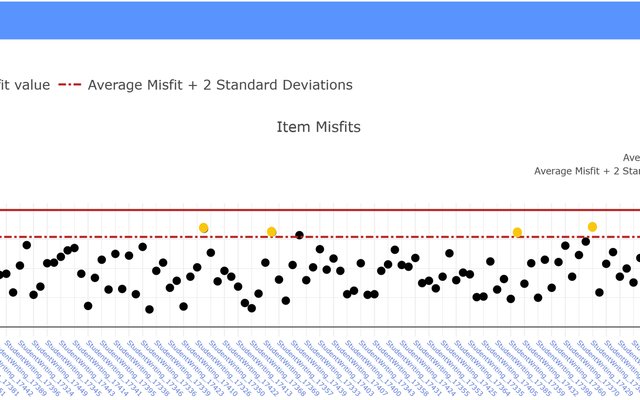

Item Misfit: Listening to What the Work Is Telling You

If judge misfit shows where people see things differently, item misfit shows where the work itself is provoking disagreement. In an RM Compare report, item misfit does not mean “bad work” or “faulty items.” It means, very specifically, “here are the pieces of work that your judging pool did not see in the same way.” Those items are often where your assessment task, your construct, and your judges’ thinking come most sharply into focus.

-

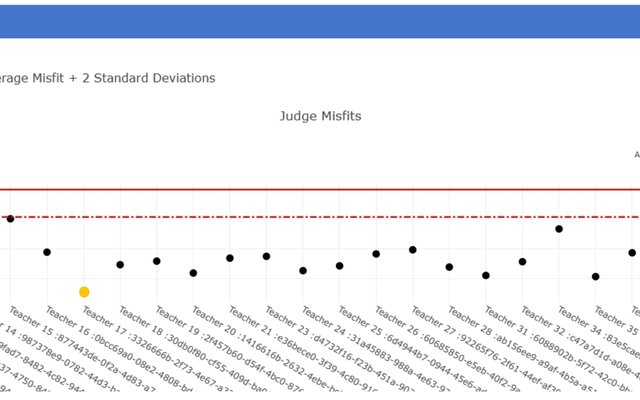

Judge Misfit: Making Sense of Agreement and Difference

When users first encounter the judge misfit graph in an RM Compare report, the immediate worry is often: “Have my judges done it wrong?” The term “misfit”, the red threshold lines, and the dots floating above them can feel like an accusation. This post reframes judge misfit as a structured way of seeing where individual judges do not share the view of value held by the rest of the judging pool, and therefore where the richest professional conversations and the most important quality checks can happen.